Audio by Joe F. using WellSaid Labs

The State of AI Pronunciation

Among the countless AI-driven innovations, text-to-speech (TTS) technology is a versatile tool that revolutionizes how we interact with content, from advertising and corporate training to educational modules and audiobooks. AI voiceovers can bring a company’s message to life and establish a voice behind the brand, making it more relatable and memorable. Whether it is an advertisement, presentation, video, or any media content, a voiceover ensures clarity, impact, and emotional resonance – ultimately helping to strengthen the connection with the target audience effectively.

And though the TTS industry has progressed over the years, the extensive complexities of the English language and nuances of pronunciation run deep. For example, consider English words that have been borrowed from other languages (triage, résumé, naive, guacamole), words with multiple correct pronunciations (data, route) or even regional preferences (aunt, caramel, apricot). Moreover, consider proper nouns like names and places or non-words like company names, internal jargon, invented words or abbreviations.

Great voiceover is not just about the voice itself, but also about how it performs the provided text. Mispronunciations can distract the listener and prevent them from absorbing the intended message. When brands and companies turn to voiceovers, they must have full control over pronunciation preferences, because that control makes their brand voice unique, more credible and personalized.

The nuanced nature of the English language and the various accepted pronunciations of a single word have proven to be a large obstacle across the industry when turning text into realistic, human-sounding voices. Even when AI is provided with an exhaustive list of rules and guidelines for English, the dynamic nature of pronunciation still makes it difficult to replicate a natural human voice.

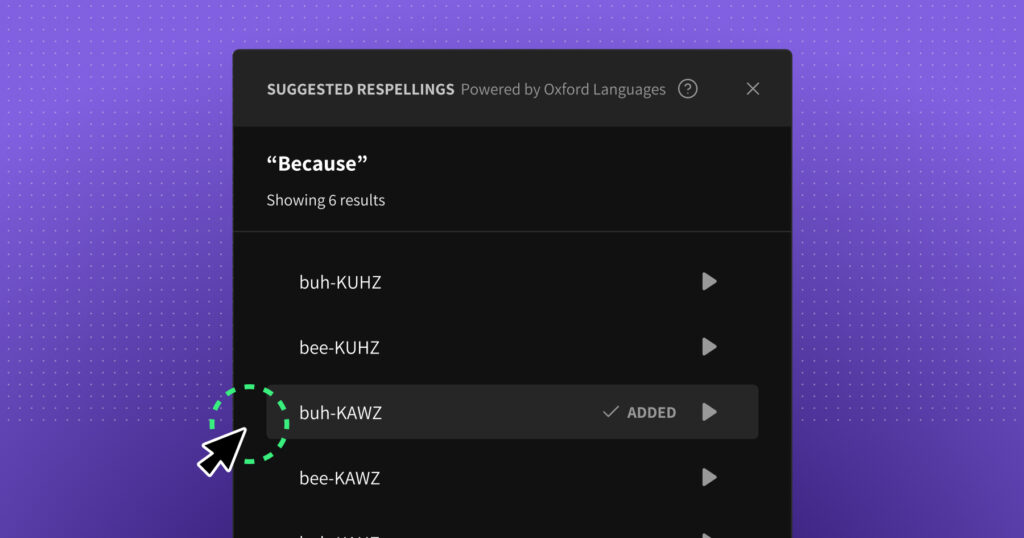

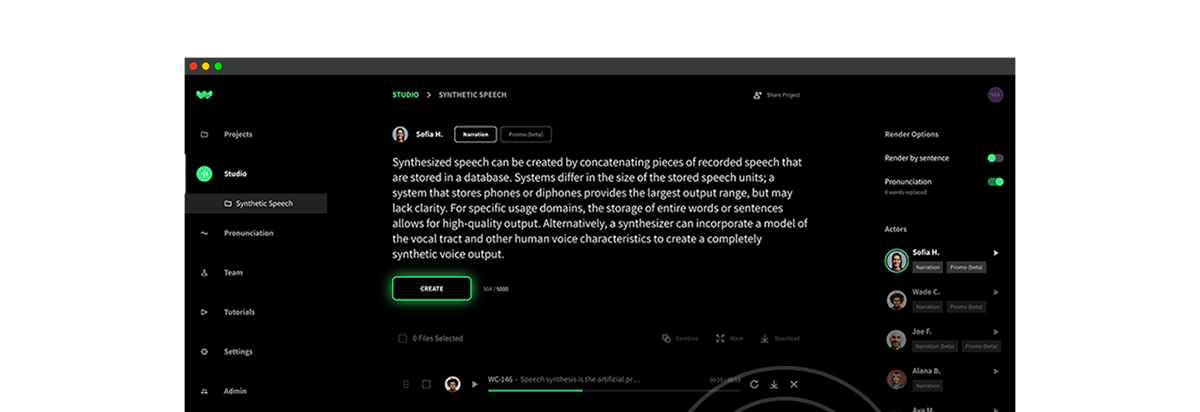

At WellSaid Labs, we sought to solve this issue with our novel Respelling system that powers our voices and allows users to customize and shape pronunciation. Our prototype was built on an open-source pronunciation dictionary, and the results were great. However, we wanted the feature to be more robust and to guide precise pronunciations of real or fictitious words. We needed to find high-quality, high-volume pronunciation data to power our Respelling system.

Collaborating with Oxford Languages

Recognizing some limitations within our initial prototype, we turned to a trusted resource to elevate our AI voices and improve their ability to pronounce respelled words accurately and consistently. We collaborated with the world’s leading dictionary publisher, Oxford Languages, to move beyond traditional sources of word transcription, significantly increase WellSaid Labs’ training data corpus, and gain more context surrounding each word, ultimately bringing our AI voices closer to human parity. This collaboration brings together the cutting-edge technology of WellSaid Labs with the renowned linguistic expertise of Oxford Languages, resulting in a groundbreaking advancement in AI voice generation.

Oxford Languages offered the nuanced understanding of pronunciation we were seeking. Its English pronunciation dataset consists of over 500,000 transcriptions, both syllabified and non-syllabified, with accompanying audio for most of these words. By incorporating hundreds of thousands of words and audio clips from Oxford Languages, our Respelling system is trained not only on a larger volume of data but on the most accurate data, which is key to ensuring Respelling rules are adhered to consistently and reliably. This ultimately helps us improve our model’s text-to-speech pronunciations and our users’ outcomes.

Further, including industry-specific terminology from Oxford Languages’ datasets proved invaluable for WellSaid Labs, particularly in sectors like medicine, where terminology is uncommon and complex. This improved pronunciation capability further helps our users approach technical words when they appear in scripts and produce a more precise outcome.

Looking Forward

The collaboration with Oxford Languages signifies a pivotal moment for WellSaid Labs, showcasing the capabilities of our advanced technology. Respelling systems have been used since 1755 to simplify pronunciation. However, because they lack precision, TTS models have struggled to incorporate them. WellSaid Labs’ technology overcomes those struggles and offers unprecedented pronunciation control.

Beyond our novel Respelling system, WellSaid Labs is committed to improving pronunciation accuracy more holistically within the model, and work to utilize Oxford Languages audio data is currently in development. Our commitment to pronunciation improvements and control is why our users and creative teams turn to WellSaid Labs as the strongest option on the market for integrating accurate, more fluid, and lifelike pronunciation preferences into their voiceover content, truly bringing presentations, advertisements, and more to life.