How many times do you interact with devices and content on a daily basis? If you’re like most, a ton. And more interactions bring a need for greater content volume and variety. To keep up, content creators strive to captivate audiences, which has created a paradigm shift.

Immersive technologies are growing more and more capable of fostering deeper connections. Likewise, how we interact with these digital mediums, and the modern tools we use to educate, inform, and entertain, are taking on greater significance.

But first, let’s take it back to 1979. The advent of the Graphical User Interface (GUI) was pivotal, enabling billions to harness the power of computers. It introduced visual elements, catering to our intrinsic need for visual stimuli, and simplified the interaction with machines. Over the years, as our reliance on digital technology burgeoned, so did our desire for harmonious interaction. Enter voice technology.

Voice is a powerful mode of bridging humans and computers, building upon the legacy of GUI. The right voice can captivate our attention, enhance retention, and immerse us in compelling narratives. Today, creatives, educators, and product developers are adopting voice as an interface, a novel medium for personalizing content.

As such, in a world inundated with vocal interactions, the quest for a voice that resonates and captivates becomes paramount. But it starts with finding just the right voice for your organization.

What is AI Voice?

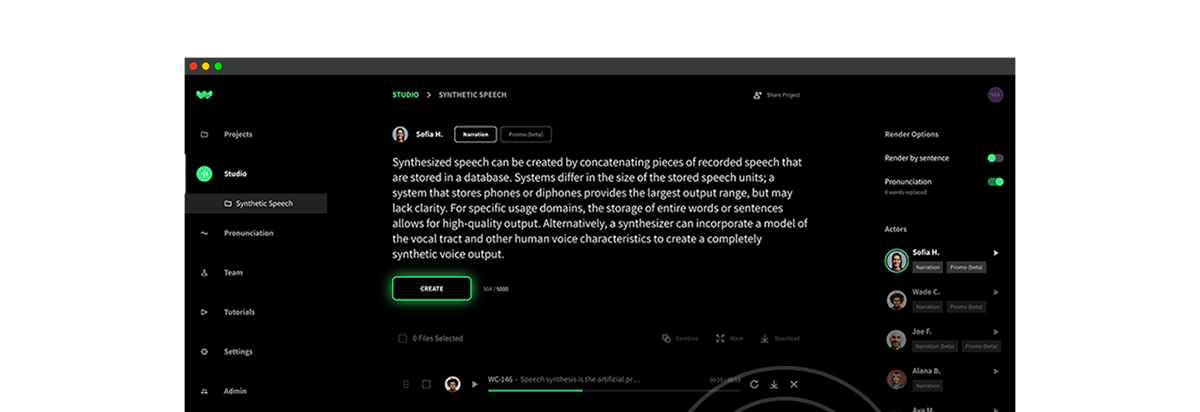

An AI voice is a meticulously crafted, AI-powered narrator, boasting a natural and authentic sound. At WellSaid Labs, we bring what we call “Voice Avatars” to life using our proprietary AI model to mimic the voices of real individuals as closely as possible. All with explicit permission, of course.

💡 Learn more about our ethical process for creating AI voices here!

In close collaboration with brands and voice talent, we sculpt the style and personality of each AI voice. Thereafter, we tailor it to the content it will breathe life into. And this process of training generally works the same across text-to-speech technologies.

After all, synthetic voices are not a novel concept. However, there’s a stark contrast between the stereotypical robotic utterances and the rich, human-like quality of a well-formed AI voice.

An AI voice can transcend the mere act of scaling voice production. It encapsulates the essence of the individual behind the voice, creating a model that is as unique as its human counterpart. Brands and content creators, striving for excellence, recognize the distinction between mass production and crafting content of the highest caliber for their audience’s enjoyment.

Speaking more broadly, an AI voice has the capacity to transform plain text into dynamic voiceovers in real time. The ease of this process empowers anyone to create a voiceover with just a few clicks, akin to composing an email. AI voices find their applications in myriad domains, ranging from corporate training and voice-enhanced applications to diverse media productions. They offer a scalable and economically viable solution to connect with customers and audiences in a manner previously unattainable without AI.

What is a custom AI voice?

A custom AI voice is essentially a digital representation of a human voice, painstakingly crafted and tailored to meet specific preferences and requirements. The process of creating a custom AI voice involves voice cloning, a sophisticated technique wherein the voice AI analyzes and learns from a sample of human speech to generate a vocal model that can replicate the nuances, tone, and articulation of the original voice.

This technology leverages the power of artificial intelligence to transform text into spoken words, creating a text-to-speech voice that sounds incredibly natural and lifelike. The customization aspect means that businesses and individuals can create a vocal identity that aligns perfectly with their brand or personal image, ensuring consistency across various platforms and interactions. So whether you’re curious about how to make an AI voice for personal use or for professional branding, understanding the intricacies of custom AI voice is essential.

💡Discover WellSaid Labs’ process for building custom voice here!

Why do brands need their own AI voice?

In a world awash with content, brands face the Herculean task of forging genuine connections with their audience. In this, differentiating one’s narrative becomes a formidable challenge. However, AI voices emerge as invaluable allies, empowering brands to craft and convey stories that encapsulate their ethos and values.

For brands aiming to create relevant and impactful content in today’s digital landscape, mastering the art of voice integration is imperative. Engaging narratives require compelling narrators, and AI voice is capable of delivering just that.

Choosing an AI voice generator over traditional methods yields operational efficiencies and cost savings while enhancing creative freedom. With easier and quicker retakes, and the elimination of workflow bottlenecks, AI voice augments your team’s capacity to keep content fresh while staying agile.

Bottom line? Owning a custom AI voice grants you exclusive rights to a synthetic voice, tailor-made for your brand.

AI Voice Creation Tutorial: What are the necessary steps for creating an AI voice?

Creating an AI voice involves several steps that combine advanced technology and sophisticated algorithms. These steps ensure that the generated voice is natural-sounding while also possessing the desired characteristics.

1. Data Collection

The first step in creating an AI voice is collecting a vast amount of high-quality data. This data includes recordings of human voices, which serve as the foundation for training the AI models. The more diverse and varied the data, the better the AI voice can mimic different accents, tones, and speech patterns.

2. Preprocessing

Once the data is collected, it goes through a preprocessing stage. This includes removing any noise, normalizing audio levels, and dividing the data into smaller sections, such as sentences or phrases. Preprocessing helps ensure that the AI models have clean and consistent data to work with.
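To make the preprocessing step more concrete, here is a minimal sketch of two of the operations described above: normalizing audio levels and splitting a recording into smaller sections at pauses. It assumes the audio is already loaded as a list of floating-point samples between -1.0 and 1.0. The function names, the threshold, and the minimum pause length are illustrative assumptions, not part of any particular production pipeline, and real systems typically work on far longer signals with dedicated audio libraries.

```python
def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the loudest one has magnitude target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # pure silence: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def split_on_silence(samples, threshold=0.02, min_silence=3):
    """Split samples into segments separated by quiet runs.

    A run of at least min_silence consecutive samples whose magnitude
    is below threshold ends the current segment. Shorter quiet runs
    stay inside the segment. Leading and trailing silence is dropped.
    """
    segments, current, quiet_run = [], [], 0
    for s in samples:
        if abs(s) < threshold:
            quiet_run += 1
        else:
            if quiet_run >= min_silence:
                # Close the segment, leaving out the quiet run that ended it.
                speech = current[:len(current) - quiet_run]
                if speech:
                    segments.append(speech)
                current = []
            quiet_run = 0
        current.append(s)
    # Flush whatever is left, minus any trailing quiet samples.
    speech = current[:len(current) - quiet_run]
    if speech:
        segments.append(speech)
    return segments
```

In practice the pause length would be expressed in milliseconds and converted to a sample count using the recording's sample rate.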

3. Training the AI Model

Next, the preprocessed data is used to train the AI model. This involves using deep learning techniques, such as recurrent neural networks (RNNs) or convolutional neural networks (CNNs), to analyze the patterns and nuances in the voice recordings. The AI model learns to generate speech by understanding the relationships between different phonemes, words, and sentences.

4. Fine-tuning and Optimization

After the initial training, the AI model undergoes a fine-tuning process. This entails refining the model’s parameters and making adjustments to improve the generated voice’s quality, intelligibility, and naturalness. Optimization techniques, such as adjusting the model’s architecture or incorporating additional data, are used to further enhance the AI voice’s performance.

5. Testing and Evaluation

Once the model is fine-tuned, it’s put through rigorous testing and evaluation processes. A major aspect of this is analyzing the generated voice for any inconsistencies, errors, or unnatural sounding elements. The AI voice is evaluated based on various criteria, such as pronunciation accuracy, intonation, and overall fluency.
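One common way to put a number on pronunciation accuracy is to transcribe the generated audio with a speech recognizer and compare the transcript with the original script. That approach is an assumption on our part here, not a description of any specific vendor's evaluation. The sketch below covers only the comparison step, computing word error rate (WER) with a standard edit-distance calculation. The function name is illustrative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # reference word missing from transcript
                curr[j - 1] + 1,     # extra word in transcript
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)
```

A WER of 0.0 means the transcript matched the script word for word. Intonation and fluency are harder to automate and usually still need human listeners.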

6. Deployment and Integration

Once the AI voice passes the testing and evaluation phase, it is ready for deployment and integration into real-time text-to-speech systems. This allows companies to use the AI voice for various applications, such as voiceovers for videos, virtual assistants, or interactive customer experiences.

Which tools are necessary for creating an AI voice?

Creating an AI voice involves the use of various essential tools. These tools are crucial in training and fine-tuning the voice model to achieve the desired output.

Text-to-Speech (TTS) Engine: A TTS engine is the core component of an AI voice generator. It converts written text into spoken words by applying complex algorithms and linguistic rules, producing output that resembles a human voice.

Deep Learning Frameworks: Deep learning frameworks like TensorFlow, PyTorch, and Caffe provide the foundation for training and developing AI voice models. These frameworks offer a wide range of tools and libraries for building and optimizing neural networks, which are crucial for creating accurate and natural-sounding voices.

Speech Data: High-quality and diverse speech data is essential for training an AI voice. This data is used to teach the model the nuances of human speech, including pronunciation, intonation, and emotion. The more data available, the better the AI voice can mimic human speech.

Natural Language Processing (NLP) Tools: NLP tools help in preprocessing and analyzing textual data. These tools enable the AI voice model to understand the input text, extract relevant information, and apply appropriate intonation and emphasis. NLP techniques, such as sentiment analysis, can also be used to make the AI voice sound more expressive and natural.

Audio Processing Software: Audio processing tools are used to enhance and manipulate the generated voice audio. These tools can perform tasks like noise reduction, pitch correction, and audio effects to ensure that the AI voice output is of high quality and meets the desired specifications.

Evaluation and Testing Tools: Evaluation and testing tools are necessary to assess the performance of the AI voice. These tools measure various aspects of the voice, such as pronunciation accuracy, intonation, and overall fluency. They help identify any potential issues or areas for improvement, allowing developers to fine-tune and optimize the voice model.

Cloud Computing Infrastructure: The training process for creating an AI voice requires substantial computational resources. Cloud computing infrastructure, such as Amazon Web Services (AWS) or Google Cloud Platform (GCP), provides the necessary scalability and computing power to train large-scale neural networks. It allows developers to efficiently process and train the AI voice models.

Developer Tools and APIs: Developer tools and APIs (Application Programming Interfaces) enable developers to integrate the AI voice into their applications and systems. These tools provide the necessary documentation, code libraries, and resources to facilitate the integration process. They allow companies to easily incorporate the AI voice capabilities into their products and services.
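As a rough illustration of what integrating a voice through an API can look like, the sketch below builds (but does not send) an HTTP request to a text-to-speech service. Everything service-specific is hypothetical: the endpoint URL, the `text` and `voice_id` fields, and the bearer-token header are placeholders, so check your provider's documentation for the real names.

```python
import json
import urllib.request

# Hypothetical endpoint, for illustration only.
TTS_ENDPOINT = "https://api.example.com/v1/tts"


def build_tts_request(text, voice_id, api_key):
    """Build a POST request asking a TTS service to render text."""
    # Hypothetical payload fields; real APIs define their own schema.
    payload = json.dumps({"text": text, "voice_id": voice_id}).encode("utf-8")
    return urllib.request.Request(
        TTS_ENDPOINT,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Sending the request would be a call to `urllib.request.urlopen(request)`, with the response body written to an audio file. The response format depends entirely on the provider.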

How can I improve the quality of my AI voice?

Artificial intelligence has come a long way in recent years. Whether you’re using AI to generate voiceovers for your company’s videos, phone systems, or any other application, it’s essential to achieve the highest possible quality for an optimally impactful and engaging experience.

Employ the Right AI Voice Generator

The quality of your AI voice starts with the voice generator you choose. Look for a solution that offers a wide variety of natural-sounding voices to match your brand’s tone and style. Additionally, consider factors such as language support, customization options, and the provider’s reputation for accuracy and reliability.

Quick tips for vetting TTS:

- Prioritize naturalness

- Cater to your audience’s dialects and languages

- Explore customization options

- Test multiple voices

- Evaluate against your budget

- Take advantage of free trials

- Consider the long-term usage

Optimize Text Preparation

The way you prepare your text can significantly impact the quality of the generated voice. Ensure that your text is well-written, clear, and concise. Avoid complex sentence structures or ambiguous phrases that may confuse the AI model. Using proper punctuation and formatting in your script can also help the AI voice deliver a more natural and fluent performance.
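If you prepare many scripts, part of this cleanup can be automated. The sketch below is one possible approach: it expands a few abbreviations, collapses stray whitespace, and makes sure the script ends with punctuation. The abbreviation table and the function name are illustrative assumptions, and you would extend the table to suit your own content.

```python
import re

# Illustrative abbreviation table; extend it for your own scripts.
ABBREVIATIONS = {
    "Dr.": "Doctor",
    "e.g.": "for example",
    "approx.": "approximately",
}


def prepare_script(text):
    """Apply simple cleanup to a script before sending it to a TTS engine."""
    # Spell out abbreviations so the engine does not have to guess.
    for abbreviation, expansion in ABBREVIATIONS.items():
        text = text.replace(abbreviation, expansion)
    # Collapse runs of spaces, tabs, and newlines into single spaces.
    text = re.sub(r"\s+", " ", text).strip()
    # End with punctuation so the final sentence gets a natural close.
    if text and text[-1] not in ".!?":
        text += "."
    return text
```

For example, `prepare_script("Dr.  Lee will   present approx. 20 slides")` returns `"Doctor Lee will present approximately 20 slides."`.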

Provide Pronunciation Guidance

AI voice models may struggle with pronouncing certain words or names correctly. To overcome this challenge, provide pronunciation guidance or include phonetic spellings for any unusual or industry-specific terms. This will help the AI voice generator deliver accurate and coherent results.
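One lightweight way to supply phonetic spellings is to keep a small dictionary of respellings and apply it to every script before generation. The sketch below assumes that approach. The dictionary entries and the function name are illustrative, and how a given engine reads a respelling varies, so listen to the result before relying on it.

```python
import re

# Illustrative respellings; tune these by ear for your chosen voice.
RESPELLINGS = {
    "SQL": "sequel",
    "nginx": "engine x",
    "Kubernetes": "koo-ber-NET-eez",
}


def apply_respellings(text, respellings=None):
    """Replace whole-word occurrences of each term with its respelling."""
    table = RESPELLINGS if respellings is None else respellings
    if not table:
        return text
    # Try longer terms first so a short term never pre-empts a longer one.
    terms = sorted(table, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b")
    return pattern.sub(lambda match: table[match.group(1)], text)
```

Because the match is on whole words, `"MySQL"` is left alone while a standalone `"SQL"` is rewritten.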

Customize the Voice

Many AI voice solutions offer customization options to tailor the voice to your specific needs. Experiment with settings such as pitch, speed, and emphasis to find the perfect balance for your content. This can help create a unique and memorable voice that aligns with your brand identity.
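Many text-to-speech engines accept Speech Synthesis Markup Language (SSML), a W3C standard, for settings like these. Whether your provider supports SSML, and which attribute values it honors, is an assumption to verify. The sketch below wraps a script in the standard `prosody` element and optionally marks one phrase with `emphasis`. The function name and defaults are illustrative.

```python
from xml.sax.saxutils import escape, quoteattr


def to_ssml(text, rate="medium", pitch="medium", emphasize=None):
    """Wrap text in SSML prosody tags, optionally emphasizing one phrase."""
    if emphasize and emphasize in text:
        # Split around the first occurrence, then escape each piece
        # separately so markup is never inserted inside an XML entity.
        before, after = text.split(emphasize, 1)
        body = (
            f"{escape(before)}"
            f"<emphasis>{escape(emphasize)}</emphasis>"
            f"{escape(after)}"
        )
    else:
        body = escape(text)
    return (
        f"<speak><prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{body}</prosody></speak>"
    )
```

Some engines require extra attributes on the `speak` element, such as a version or language, so treat the bare tag here as a starting point.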

Train the AI Model

Some AI voice generators allow you to train the model with your own data. By providing specific examples and recordings of the desired voice style, you can improve the accuracy and naturalness of the generated voice. This process may require additional time and resources but can result in a more personalized and high-quality AI voice.

Regularly Evaluate and Test

Continuously evaluate and test the performance of your AI voice to identify any areas that need improvement. Listen to generated voice samples and gather feedback from users or focus groups. This feedback can help you identify any issues with pronunciation, intonation, or overall quality, allowing you to make necessary adjustments and enhancements.

Use High-Quality Speech and Audio Data

The quality of the speech data used to train the AI model can directly impact the output. Ensure that the speech data is clean, diverse, and representative of the target audience. This allows the AI model to better understand and replicate natural speech patterns, resulting in a more realistic and high-quality voice.

Leverage Cloud Computing Infrastructure

AI voice generation requires significant computational power. Using cloud computing infrastructure can help ensure faster processing times and scalability, especially for large-scale voice generation projects. Consider partnering with a provider that offers robust cloud infrastructure for seamless and efficient AI voice generation.

How do I ensure a realistic AI voice when I create audio with text to speech?

Whether you’re a company looking for a voiceover solution or an individual interested in creating lifelike voices for personal projects, you’re likely aiming for something natural sounding. Here are some tips to help you achieve just that.

- Tip: Train your AI model with diverse data.

- Why? This ensures that the model learns to accurately mimic different speech patterns, accents, and emotions. Include variations in age, gender, and regional dialects to make the voice more versatile and authentic.

- Tip: Pay attention to intonation and emphasis.

- Why? By analyzing and replicating the patterns of emphasis and intonation found in human speech, you can make your AI voice sound more lifelike.

- Tip: Incorporate pauses and breaths.

- Why? These breaks in speech help to create a more realistic and human-like voice. When training your AI voice model, make sure to incorporate these pauses and breaths at appropriate intervals. This will add a sense of authenticity to the generated voice.

- Tip: Use high-quality, clear recordings as input data to ensure that the generated voice sounds crisp and professional. Avoid using low-quality or noisy recordings, as they can introduce artifacts and distortions into the voice output.

- Why? The quality of the audio samples used to train your AI voice model can greatly impact its naturalness.

- Tip: Continuously iterate and refine your AI voice model based on user feedback and performance metrics. Monitor how well the voice mimics human speech and make adjustments as needed.

- Why? Achieving a natural-sounding AI voice is an ongoing process. Regular updates and improvements will help to enhance the naturalness of the generated voice over time. Likewise, note how nuances in your script change the output, so you better understand how the model renders speech.

- Tip: Test and adjust in real time.

- Why? This iterative approach will help you fine-tune the voice in real time, resulting in a more realistic and high-quality voice.

The WellSaid Advantage

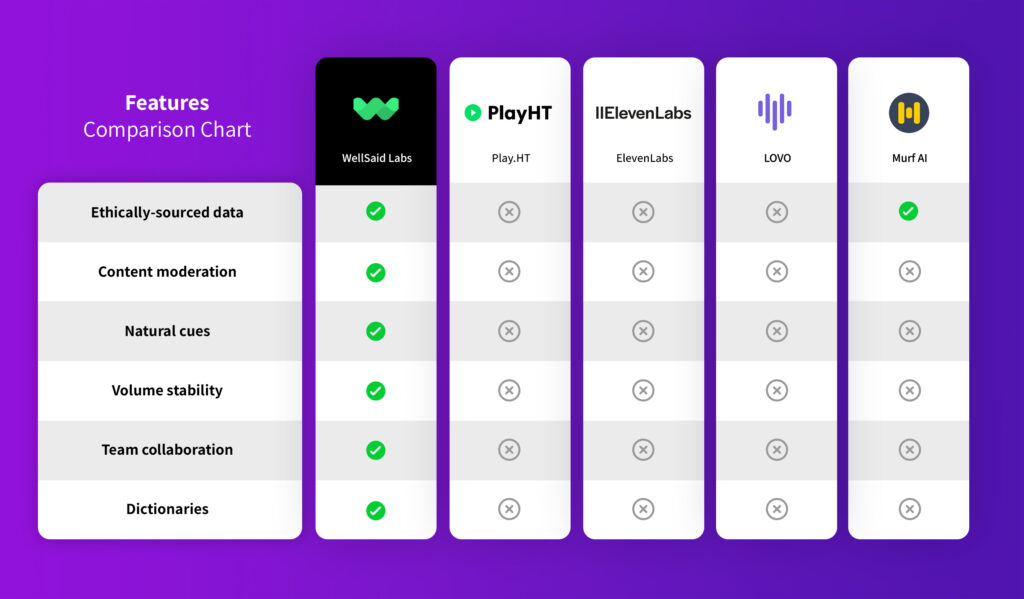

WellSaid Labs stands at the forefront of voice AI innovation, offering an AI voice generator that transcends the capabilities of conventional text-to-speech technologies. One of the primary benefits of using WellSaid Labs is the unparalleled quality of the AI voices produced. Our advanced AI voice cloning algorithms ensure that our generated voices are never a robotic monotone. Instead, they are rich, expressive, and natural-sounding, capturing the essence of human speech.

Furthermore, the versatility of our Voice Avatars makes them an invaluable asset for various applications. From creating engaging and relatable content for digital platforms to enhancing user experience in voice-assisted devices, the potential use cases are vast. By integrating our technology, businesses can establish a unique vocal brand identity, fostering a stronger connection with their audience.

And, lastly, the efficiency of our AI voice generator significantly reduces the time and resources required to produce high-quality voice content. This streamlines content creation and enables rapid scaling, so businesses can quickly adapt to changing needs and demands.

How good can voice cloning be?

WellSaid Voice Avatars embody the pinnacle of natural-sounding, computer-generated voices, mirroring the original voice actor’s style with precision. Our unwavering commitment to quality and authenticity culminated in a groundbreaking achievement in June 2020, when WellSaid became the first text-to-speech company to attain Human Parity.

In a comprehensive evaluation, participants compared a series of recordings, spanning both synthetic and human voices. Tasked with rating the naturalness of each recording, participants bestowed an average score of 4.5 to human voice actors—a benchmark also attained by our synthetic voices.
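For readers curious how a listening test like this is typically summarized, the sketch below averages naturalness ratings into a mean opinion score and adds a rough margin of error. It is a generic illustration, not WellSaid's actual methodology. The 1-to-5 scale, the normal-approximation interval, and the function names are all assumptions.

```python
from math import sqrt
from statistics import mean, stdev


def mean_opinion_score(ratings, scale=(1, 5)):
    """Return (mean rating, half-width of an approximate 95% interval)."""
    low, high = scale
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(r < low or r > high for r in ratings):
        raise ValueError(f"ratings must fall between {low} and {high}")
    # Normal approximation: 1.96 standard errors on either side of the mean.
    half_width = 1.96 * stdev(ratings) / sqrt(len(ratings))
    return mean(ratings), half_width


def comparable(human_ratings, synthetic_ratings):
    """True when the two approximate intervals overlap."""
    human_mean, human_half = mean_opinion_score(human_ratings)
    synth_mean, synth_half = mean_opinion_score(synthetic_ratings)
    return abs(human_mean - synth_mean) <= human_half + synth_half
```

Overlapping intervals are only a rough signal that two sets of scores are close. A formal study would use a proper statistical test and many more ratings.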

What does this monumental achievement mean for you? It attests to the unparalleled quality of a WellSaid Avatar, ensuring that your audience experiences a voice indistinguishable from a human narrator. This is pivotal for content creators who aspire to engage, inspire, and move their audience. At WellSaid, our dedication extends to empowering creators to produce content of the highest echelon, and our AI voices are instrumental in this pursuit.