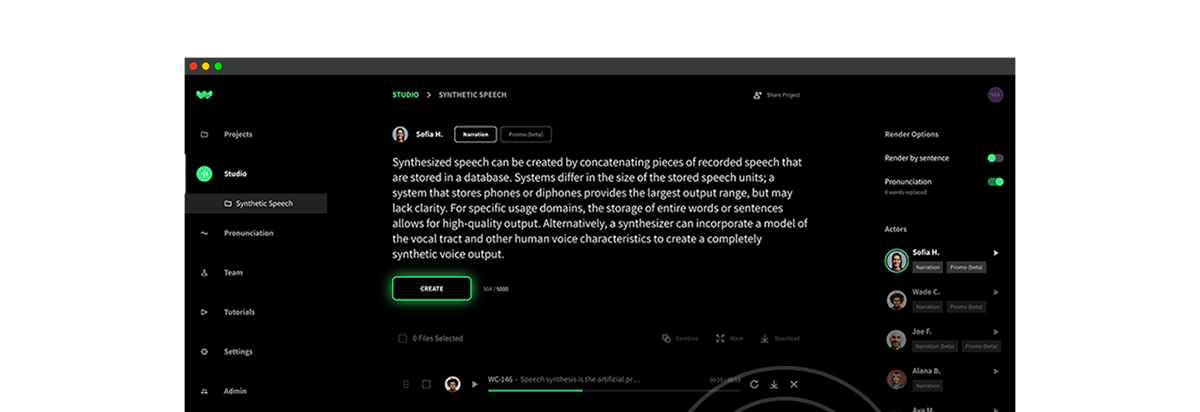

Audio by Paula R. using WellSaid Labs

Ever watched a movie without its score? Or imagined a game without its defining sound effects? Audio is an unsung hero, subtly crafting experiences that evoke emotion, tell stories, and define entire genres. Now, imagine a world where this symphony of sounds, from the deepest bass notes to the gentlest whispers, could be synthesized by a single generative AI model.

Welcome to the world of Audio Foundation Models (AFM). 🌎🗣️

Understanding Foundation Models beyond the technical jargon

Foundation models are often described in dense technical terms. At their core, though, they are simply models trained on massive amounts of data so that they can subsequently tackle a broad array of tasks. A model is considered a Foundation Model if it performs at, or near, state-of-the-art levels on those tasks, as the original Foundation Models, BERT and ELMo, demonstrated.

Yet for the average user, these technical intricacies matter less. In fact, we have seen that a model’s performance and acceptance are not strictly tied to its size or to the amount of data it was trained on. Consumers don’t care how many billion tokens a model was trained on. What truly makes a model foundational is (1) its generality and (2) its impact.

As WellSaid’s CTO and co-founder, Michael Petrochuk, puts it, “When I think about the broader conversation on Foundation models, I think this is what matters most: the model’s broader capability, impact, and generality. The original and technical definition for a Foundation model isn’t as relevant to the conversation today.”

An industry perspective on the need for AFMs

So far, we have yet to see a foundation model successfully implemented in the audio space. Despite the industry’s progress, music generators branded as foundation models (with labels like “LLaMA of audio”) still fall short of the “foundation” mark.

You may have heard a short “sample” of synthetic speech from a so-called AFM, but we’d bet against that model reliably reproducing that audio or extending it much further. Think about it: if a music generator can only churn out 30 seconds of background sound, can it truly be deemed foundational? That’s more of a trick than a well-established trade.

For a real-world example, consider Voicebox, the latest model promoted as a “Foundational Model.” Regrettably, it often mispronounces words, as its own blog acknowledges through its reported Word Error Rate (WER). It’s hard to believe that a TTS system with such pronunciation issues captures the real essence of a Foundation Model. While its ideas and demonstrations are interesting, the prototype leaves a lot to be desired before it can be considered foundational.
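Word Error Rate is a standard speech metric: the number of word substitutions, deletions, and insertions needed to turn a transcript into the reference script, divided by the number of words in the reference. For TTS, it is typically computed by transcribing the synthesized audio with a speech recognizer and comparing that transcript to the input script, so both mispronounced and skipped words push it up. The sketch below covers only the scoring step and assumes you already have the transcript; the function name `word_error_rate` and the plain whitespace tokenization are our own illustrative choices, not Voicebox’s or WellSaid’s evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length.

    Illustrative sketch: lowercases and splits on whitespace, with no
    further text normalization (punctuation, numbers, etc.).
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the ref words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # script word missing from the audio (e.g. a skipped word)
            insertion = curr[j - 1] + 1  # extra word that is not in the script
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    script = "the quick brown fox jumps over the lazy dog"
    # One skipped word ("brown") and one substitution ("jumps" -> "jumped").
    transcript = "the quick fox jumped over the lazy dog"
    print(f"WER: {word_error_rate(script, transcript):.3f}")  # 2 errors / 9 words
```

Note that WER can exceed 1.0 when the output contains many inserted words, and real evaluations usually normalize numbers and punctuation before scoring, which this sketch skips.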

But at WellSaid Labs, we conceptualize AFMs differently. We envision an AFM capable of producing diverse types of audio, whether voice-overs, music, or ambient sounds. This AFM should not only generate a wide range of content but also ensure that content is realistic and authentic. Much as OpenAI’s ChatGPT became a critical tool for content creators almost overnight, WellSaid Labs is committed to building the audio generation tools content creators need to craft the perfect listening experience for their audiences.

The path ahead for Audio Foundation Models

Text generation models like ChatGPT have had a profound impact on their industry, and in a similar way, the AFMs of the future will revolutionize the audio industry. The next wave of AFMs will offer tools for generating diverse audio content at an unprecedented scale.

As pioneers in this domain, we at WellSaid Labs have planned our journey meticulously.

As Petrochuk explains, “We are developing the building blocks and invariants for an Audio Foundation Model, and are testing those on progressively more and more challenging speech generation tasks. We are excited to soon take on audio more broadly.”

These building blocks have allowed us to model human voices with unparalleled accuracy, consistency, and reliability. Through our work, we’ve developed a model adept at capturing various accents, languages, and styles. Our model is demonstrating that it can generalize to complex, hard-to-pronounce words, grasp the underlying context, and produce clips of varying lengths without a dip in quality. This lays an exciting foundation for modeling audio more generally.

So far on the road to AFM, we have achieved:

Multi-Speaker Generation: A single model can represent multiple speakers; ours currently supports 50+ Voice Avatars.

Long-Form Audio Generation: We can generate high-quality audio indefinitely. In internal tests, we generated as much as 8 hours of quality audio without post-processing. We have yet to see any other team achieve this.

Human Parity MOS: In a study with third-party evaluators, our Voice Avatars received Mean Opinion Scores (MOS) showing they were as natural as their original recordings.

No Degradation/Gibberish: Our team has listened to thousands of clips and has resolved every instance of degradation.

Volume Consistency: We measure the model’s consistency and have ensured that volume stays consistent both within and between clips.

Non-standard Word Support (Out-of-Vocabulary, or OOV, Support): We implemented layers that normalize and standardize text, so our model handles non-standard words consistently and accurately.

No Word Skipping: Unlike several well-known models, such as VALL-E, our model has been built to ensure it doesn’t skip any words in the script.

Accent Support: We have worked with voice actors and script writers from around the world to authentically model their accents.

Contextual Pronunciation: Our model takes the entire context into account, which shows in how it adjusts its pronunciation based on context and dialect.

Complex Pronunciation: We have had success with, and are closely monitoring, the model’s ability to handle industry-specific terminology. This is usually a good indicator that the model is generalizing beyond common words.

Clean Audio Cutoff: Importantly, we’ve ensured our model understands audio breaks as much as it understands content, so it cleanly cuts off audio generation at the expected stopping point.

Dynamic Context: We are testing our model to ensure it accounts for context as it changes throughout a script.

Phonetic Cues: We have built a novel Respelling tool and have proven that our model responds to phonetic guidance, including generating accurate consonant sounds, vowel sounds, and syllabic emphasis.

Delivery Cues: Our brand-new research now shows that our model responds accurately and consistently to cues that adjust pacing, pausing, and emphasis.
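The Volume Consistency item above describes keeping loudness steady within and between clips. One simple way to put a number on that is to compute the RMS level of each window (or each clip) in dBFS and look at the spread between the loudest and quietest. This is our own illustrative sketch, not WellSaid’s measurement method: it assumes mono float samples in the range [-1.0, 1.0], and the function names and window size are hypothetical. Perceptual loudness standards such as ITU-R BS.1770 (LUFS) weight frequencies differently and are the more common choice in production audio.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level in dB relative to full scale, for samples in [-1.0, 1.0]."""
    if not samples:
        raise ValueError("need at least one sample")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def window_levels(samples: Sequence[float], window: int) -> list[float]:
    """RMS level of each non-overlapping window (a trailing partial window is included)."""
    return [rms_dbfs(samples[i:i + window]) for i in range(0, len(samples), window)]


def level_spread_db(levels: Sequence[float]) -> float:
    """Gap between the loudest and quietest level; smaller means more consistent."""
    return max(levels) - min(levels)


if __name__ == "__main__":
    # Two hypothetical "clips": square waves at amplitude 0.5 and 0.25.
    clip_a = [0.5, -0.5] * 8000
    clip_b = [0.25, -0.25] * 8000
    # Within-clip: every window has the same level, so the spread is 0 dB.
    print(level_spread_db(window_levels(clip_a, window=1600)))
    # Between clips: halving amplitude lowers the level by 20*log10(2), about 6.02 dB.
    print(round(level_spread_db([rms_dbfs(clip_a), rms_dbfs(clip_b)]), 2))
```

On real speech you would also gate out silent windows first, since pauses between sentences would otherwise show up as very low (or `-inf`) levels and inflate the within-clip spread.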

With this work, we are continuing to stretch our model’s ability to confidently and reliably handle more and more challenges. We are well on our way to demonstrating that we can build a foundational model that performs for our users, always.

Overcoming the complexities of audio modeling

Our forthcoming model enhances content delivery by giving users unprecedented control over how their content is interpreted. Beyond pronunciation and pausing guidance, our approach also allows adjustments to speaking pace and loudness.

Specifically:

- It generalizes across all our speaker profiles, whether by style or accent.

- It is adaptable to various content lengths, from a single word, to phrases, sentences, and even entire scripts.

- It provides a broad range of loudness and pace guidance, from speaking softly to speaking loudly, and from enunciating words to rushing their delivery.

So, what does this mean for creators like you? It translates to distinctive performances that capture every nuance, consistently.

Central to our mission is the creation of a model that can generate a wide array of audio types, emphasizing realism and responsiveness. Our concentrated efforts have been channeled into modeling the voices of real individuals as authentically, consistently, and reliably as possible.

WellSaid’s claim to AFM

WellSaid Labs has discovered an approach that is a first for the industry, and we are now introducing it to the public. Built through our research and development, our new model combines the strengths of older approaches while avoiding their weaknesses.

Our upcoming release seeks to push these boundaries even further.

Our main objective is a model that can produce any, or most, types of audio. We want that audio to be realistic, and we want the model to be directable. Until now, our focus has been on modeling the voices of real people as authentically and reliably as possible, and through these efforts we’re nearing a fairly broad foundation model for speech.

Broader implications beyond speech

So, where does all this lead us in the context of AFMs? The functionalities we’ve crafted aren’t confined to speech alone. In essence, we’re paving the way for users to generate diverse audio content, much like a maestro orchestrating a symphony. The AFMs of the future will empower everyone to be the conductor of their audio experience.

AFMs represent more than just another tech buzzword—they symbolize the future of audio content generation. At WellSaid Labs, we’re not just chasing this dream. We’re crafting it, ensuring that every individual can have a voice (or sound) that’s as authentic and unique as they are.

We are creating audio for all.