Audio by Ramona J. using WellSaid Labs

AI solutions are only as powerful as the instructions they receive. That’s especially true in text-to-speech (TTS) technology, where Speech Synthesis Markup Language (SSML) lets developers and content creators specify in detail how synthesized speech should sound.

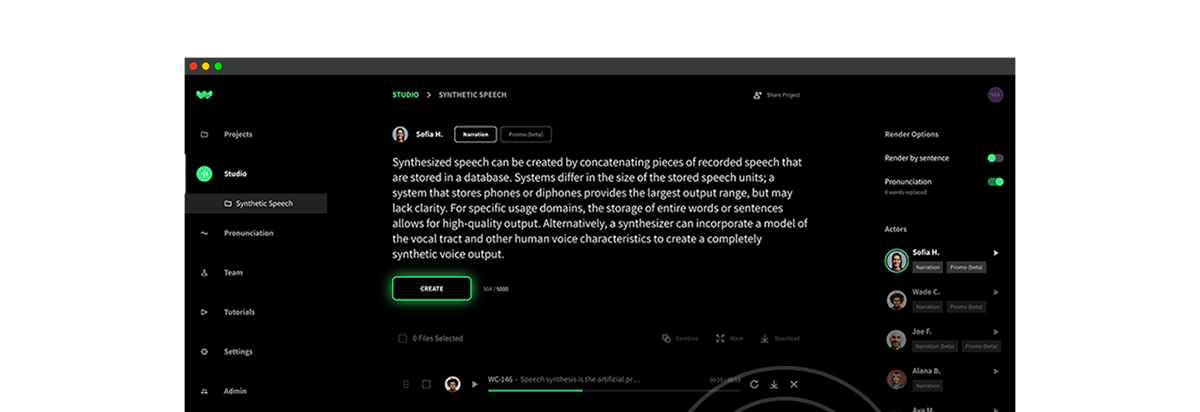

At WellSaid Labs, we’re excited to introduce the SSML <say-as> tag in our V10 API model. This feature represents a leap forward in our mission to provide nuanced and precise voice output. Let’s explore the mechanics of SSML and the capabilities of the <say-as> tag, and see how this new feature improves the way we interact with synthetic voices.

Understanding SSML

SSML is akin to the HTML of speech synthesis. It’s a standardized, XML-based markup language, published as a W3C recommendation, for giving TTS engines detailed directions on how to read text aloud. From modifying pitch, speed, and volume to inserting pauses and emphasizing certain words, SSML allows for tailored speech output that closely mimics natural speech patterns.
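To make this concrete, here is a minimal sketch in Python that assembles a small SSML document with a pause, an emphasized word, and a slower, lower-pitched closing sentence. The `break`, `emphasis`, and `prosody` elements and their attributes come from the W3C SSML specification; which of them a given TTS engine (including the V10 API model) accepts is an assumption to check against that engine’s documentation. The function name `build_ssml` is purely illustrative.

```python
import xml.etree.ElementTree as ET


def build_ssml() -> str:
    """Build a small SSML document using standard W3C SSML elements."""
    # <speak> is the root element of every SSML document.
    speak = ET.Element("speak")
    speak.text = "Welcome back. "

    # <break> inserts a pause; text after it is stored as the element's tail.
    pause = ET.SubElement(speak, "break", {"time": "500ms"})
    pause.tail = "Your order is "

    # <emphasis> stresses the enclosed word.
    emphasis = ET.SubElement(speak, "emphasis", {"level": "strong"})
    emphasis.text = "ready"
    emphasis.tail = " for pickup. "

    # <prosody> adjusts rate and pitch for the enclosed text.
    prosody = ET.SubElement(speak, "prosody", {"rate": "slow", "pitch": "low"})
    prosody.text = "Thank you for your patience."

    return ET.tostring(speak, encoding="unicode")


if __name__ == "__main__":
    print(build_ssml())
```

Building the markup with an XML library rather than string concatenation keeps the document well-formed and escapes special characters in the text automatically.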

The Role of the <say-as> Tag

Why bring up SSML? Because we have just released support for the <say-as> tag. A cornerstone of the SSML framework, it specifies how a segment of text should be interpreted and pronounced.

This tag is essential for scenarios where the default speech synthesis approach may not align with the intended meaning of the text, such as reading sequences of digits as telephone numbers, dates, or cardinal numbers.
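As an illustration, the sketch below wraps a digit sequence and a date in <say-as> elements so that an engine can tell a telephone number from a plain number. The `interpret-as` and `format` attributes are part of the W3C SSML markup, but the specific values shown (`telephone`, `date`, `mdy`) follow common W3C-note conventions and are assumptions here, not a confirmed list of what the V10 API model accepts. The helper names `say_as` and `build_reminder` are hypothetical, and the phone number is a fictional example.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def say_as(text: str, interpret_as: str, fmt: Optional[str] = None) -> ET.Element:
    """Wrap text in a <say-as> element (attribute values are assumptions)."""
    attrs = {"interpret-as": interpret_as}
    if fmt is not None:
        # 'format' refines the interpretation, e.g. month-day-year for dates.
        attrs["format"] = fmt
    element = ET.Element("say-as", attrs)
    element.text = text
    return element


def build_reminder() -> str:
    """Compose a sentence containing a telephone number and a date."""
    speak = ET.Element("speak")
    speak.text = "Call "

    phone = say_as("2065550142", "telephone")
    phone.tail = " before "

    date = say_as("03/04/2025", "date", fmt="mdy")
    date.tail = "."

    speak.extend([phone, date])
    return ET.tostring(speak, encoding="unicode")


if __name__ == "__main__":
    print(build_reminder())
```

Without the tag, an engine might read the ten digits as a single large number, or read the date as a fraction or day-first; the markup removes that ambiguity.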

Feature overview

The integration of the <say-as> tag in our V10 API model lets developers tell the TTS engine what kind of content a piece of text represents, so that it is read out in the intended manner. This feature is crucial for achieving more accurate and lifelike voice output, particularly in applications where precision in speech is paramount.

With this feature, we aim to resolve common issues faced by developers, such as numerical sequences or addresses being read incorrectly. The <say-as> tag solves this by letting developers specify the desired format, so the spoken output matches the intended reading.

Milestones and future directions

We’re beginning by supporting a variety of interpret-as values, encompassing essentials like addresses, dates, and telephone numbers. These will initially focus on US-centric needs before expanding globally.
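Because only some interpret-as values are supported at launch, it can help to check markup before sending it for synthesis. The following is a minimal sketch of such a check. The set `ASSUMED_SUPPORTED` simply mirrors the three categories named above (addresses, dates, telephone numbers); the exact strings are assumptions, and the function name `unsupported_say_as_values` is hypothetical rather than part of any WellSaid Labs API.

```python
import xml.etree.ElementTree as ET
from typing import List, Set

# Assumed spellings of the launch categories; confirm against the API docs.
ASSUMED_SUPPORTED: Set[str] = {"address", "date", "telephone"}


def unsupported_say_as_values(
    ssml: str, supported: Set[str] = ASSUMED_SUPPORTED
) -> List[str]:
    """Return interpret-as values in the SSML that are not in `supported`.

    Assumes the document declares no default XML namespace; with one,
    the tag name would need the namespace prefix.
    """
    root = ET.fromstring(ssml)
    problems: List[str] = []
    for element in root.iter("say-as"):
        value = element.get("interpret-as")
        if value is None:
            # interpret-as is required on <say-as>, so flag its absence.
            problems.append("<missing>")
        elif value not in supported:
            problems.append(value)
    return problems


if __name__ == "__main__":
    doc = (
        "<speak>Due <say-as interpret-as=\"date\">03/04/2025</say-as>, "
        "total <say-as interpret-as=\"currency\">$5</say-as>.</speak>"
    )
    print(unsupported_say_as_values(doc))
```

Passing the supported set as a parameter means the check can be widened as more formats are added, without changing the function itself.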

The roadmap includes adding more formats and greater flexibility to serve a broader audience, in line with our commitment to continuous improvement and user satisfaction.

Looking forward

The SSML <say-as> tag is part of our broader push for progress and precision in the field of speech synthesis. At WellSaid Labs, we’re creating more than synthetic speech: we’re crafting experiences that resonate, communicate, and inspire.