Audio by Joe F. using WellSaid Labs

From deciphering dinner recipes to finding that oddly specific ’80s song we can’t get out of our heads, AI’s taken the co-pilot seat in our modern lives. It’s our go-to for work queries, passion projects, and those moments when we just can’t recall the lead actor in that movie. You know the one.

Usually, a well-crafted prompt to our AI sidekick gifts us the golden nugget of wisdom we’re after. But occasionally, instead of gold, we unearth… a rubber chicken? Irrelevant, often hilarious, and utterly fabricated, these “rubber chicken” moments are what we affectionately (or not-so-affectionately) call AI hallucinations.

By definition, an AI hallucination is when an AI system, aiming to be oh-so-helpful, ends up spinning a yarn that’s less fact and more fairy tale. It’s like asking for directions to the nearest coffee shop and getting a narrative about elves brewing lattes.

Now, here’s a shocker: 96% of our online buddies are familiar with these digital mirages, with a whopping 86% having taken an unexpected trip down AI’s make-believe lane.

Here’s an example from my own experience. I put ChatGPT (running GPT-4) in the hot seat with a query about “Queen Starlight Strawberry Sparkles”, and it coyly dodged the question, citing its training cut-off. But once I tossed the same query into the OpenAI Playground, I was on my way to learning all the facts about a rainbow-maned pony.

This free-rein-in-the-playground approach, adopted by many white-labeled GPT versions, can lead to a parade of tall tales without checks and balances. And while that might be fun on a whimsical jaunt, these fictional tales become a real problem when they spread into more serious contexts.

For instance, Stack Overflow, the techie’s sanctuary for coding queries, had to impose a time-out on ChatGPT-generated answers. Why? Turns out, ChatGPT is great at sounding knowledgeable, yet its answers were wrong too often to be trusted.

The chatter about these AI daydreams is buzzing louder than a caffeinated bumblebee. So let’s delve into the hows and whys of these AI slip-ups, ponder their implications, and arm you with tools to keep your AI interactions more factual and less fantastical.

Stay with us. It’s about to get real (100% hallucination-free, of course)!

The art of AI bluffing

AIs: the modern-day mystery boxes. Though parts remain enigmatic, we’ve pinned down why they sometimes seem more like fiction writers than factual assistants.

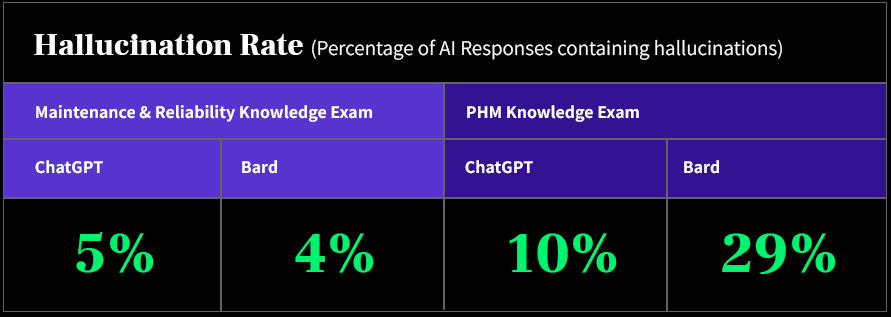

For one, AI hallucinations aren’t a digital fever dream. They’re often the system’s attempt to offer a satisfying answer, even when it’s clueless. On maintenance and reliability exam questions, AI goofed up 4%–5% of the time. But on prognostics and health management (PHM) questions? A staggering 10%–29%!

Wondering why? Here’s the scoop.

Data drama

If AI had a diet, it would be data. And that data comes from everywhere, from reputable sites like Wikipedia to the wild west of Reddit. But like any diet, quality matters. Feed it junk and, well, you get junk. The vast sea of the internet harbors plenty of debris: misinformation, biases, and yes, even hate speech.

Chatbots, in their thirst for knowledge, guzzle it all down. And when they regurgitate it, the result is sometimes a mishmash of truths, half-truths, and pure fantasy. Hence the term “hallucination”: output that veers into the absurd, the irrelevant, or the just plain wrong.

Lost in translation

Throw some idioms or slang their way, and watch the fireworks! If an AI isn’t familiar with “spilling the tea” or “it’s raining cats and dogs”, you might end up with some amusing, nonsensical outputs.

Overly friendly dogs and overthinking AI

There’s a term for when we look at our dog, who just made fleeting eye contact, and go, “Oh, what a genius!” That’s anthropomorphism, AKA our knack for attributing human qualities to non-human entities. If a computer starts crafting sentences akin to Shakespeare, we’re duped into believing it’s penning sonnets in its free time. Fun fact: a Google engineer was so convinced that the company’s AI had achieved sentience that, after their claims were dismissed, Google placed them on paid leave. Spoiler alert: they didn’t return to their desk; they were fired soon after.

Overfitting overdrive

Think of an AI as the student who crams the night before the exam. They can recite everything verbatim but fail to apply the knowledge in a new context. Trained on limited datasets, AIs may simply memorize responses, which leaves them lost in fresh scenarios. Hence, the hallucination carnival begins. After all, they’re not as smart as they’d have you think.
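
To make the crammer analogy concrete, here is a deliberately toy Python sketch. It is a hypothetical illustration, not how real language models work internally: the “model” is just a lookup table of memorized question-and-answer pairs (the `MEMORIZED` table and `crammer_answer` function are made up for this example). When it meets a question it never saw, it grabs the closest-looking memorized question using the standard library’s `difflib.get_close_matches` and repeats that answer with total confidence instead of admitting it doesn’t know.

```python
import difflib

# Hypothetical "crammer" model: question -> answer pairs memorized verbatim.
MEMORIZED = {
    "what is the capital of france": "Paris",
    "what is the capital of spain": "Madrid",
    "who wrote hamlet": "William Shakespeare",
}


def crammer_answer(question: str) -> str:
    """Answer from memory and never admit ignorance (which is the problem)."""
    key = question.lower().strip(" ?")
    if key in MEMORIZED:
        # Seen during "training": recite the memorized answer verbatim.
        return MEMORIZED[key]
    # Unseen question: cutoff=0.0 accepts even a poor match, so the model always
    # returns the answer to whichever memorized question looks most similar.
    closest = difflib.get_close_matches(key, list(MEMORIZED), n=1, cutoff=0.0)
    return MEMORIZED[closest[0]]


print(crammer_answer("Who wrote Hamlet?"))             # memorized: William Shakespeare
print(crammer_answer("What is the capital of Peru?"))  # unseen: confidently wrong
```

The second call returns one of the memorized answers for Peru, a confident wrong answer, because nothing in the sketch gives it a way to say “I don’t know.”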

Sneaky shenanigans

And sometimes, it’s pure mischief. Certain prompts are crafted just to trip up the AI, like asking someone a riddle they’ve never heard before. It’s all fun and games until someone gets a wonky answer.

So, next time you find yourself chatting with an AI and receive an answer that seems like it’s plucked from a sci-fi novel, take a moment to appreciate the intricate dance of data and algorithms. It’s AI trying its best, even if it sometimes gets lost in its own digital daydreams.

When AI fibs affect reality

Alright, lean in, folks, because this bit might just give you some techy trust issues.

So, you’ve had a laugh or two when ChatGPT took a wild guess about unicorns running for president. “Just AI being its quirky self,” you thought. But here’s where the fun and games start getting a tad concerning.

A mind-boggling 72% of users, perhaps just like you, trust AI to serve up the straight facts. But here’s the plot twist: a staggering 75% of this trusty brigade have been led astray by AI’s fabricated tales. That’s right: three out of four of those trusting users, or roughly 54% of all users, have been hoodwinked by their own digital assistants!

But why the fuss over a few digital hiccups?

Well, it’s not all about having a giggle at a rogue robot response. These AI daydreams are weaving themselves into the broader tapestry of concerns surrounding artificial intelligence.

Trust is a major concern. If we can’t bank on AI for the truth, where does that leave our growing reliance on these systems? With every inaccurate “fact” an AI presents, another brick comes out of the wall of trust we’ve built around it.

Throw bias into the mix, and an unchecked hallucination becomes even more concerning. Taken at face value, these blunders can reinforce biases, perpetuate stereotypes, or even cause real harm. Your AI may have no ill intent, but its misguided musings can inadvertently push harmful narratives.

In addition, there’s the whole echo chamber of it all. Picture this: AI systems that keep feeding on the vast buffet of the internet, which, let’s face it, isn’t all gourmet. There’s a lot of junk food in the form of misinformation, biases, and, dare we say, fake news. The result? A vicious cycle in which AI keeps consuming, then serving back up, the very falsehoods we’re trying to weed out.

So, while it’s fun to chuckle at an AI’s random musings about Martian colonies or chocolate rain, it’s essential to understand the broader implications. AI hallucinations can be quirky, but they’re also a real signal flare, one that reminds us to approach the age of information with a discerning eye.

After all, we don’t want our digital future to be based on tales taller than the beanstalk Jack climbed, do we?

How you can steer AI back to reality

Here’s a silver lining: while AI hallucinations can be baffling, we still have some control over them. And even better? We’ve got the intel on how you can curb those AI daydreams and steer your bot in the right direction.

But, first, let’s get our stats straight. A cheeky 32% of users trust their gut (old school, but it works!) to spot when AI’s got its wires crossed. Meanwhile, a more tech-savvy 57% play digital detective, cross-referencing with other sources. Still, why react when you can prevent?

These tips, borrowed from the art of “prompt engineering”, will help.

🛝 Limit the playground: Let a toddler loose in a toy store without direction, and chaos ensues. Same with AI. Guide its thought process. Instead of an open-ended request like “Tell me about the universe,” ask, “Is the universe constantly expanding? Yes or no?”

🗺️ Design a blueprint with data templates: Think of GPT as that student who needs clear instructions. Instead of a vague question, give it a table or data format to follow. So, for instance, instead of saying, “Calculate the growth percentage,” provide a table with values from Year 1 and Year 2, and then ask for the percentage growth.

📚 The grounded approach: Offer vetted data and context. It’s like giving your AI a trusted encyclopedia to reference. A prompt in this manner would look like: “Using the sales data from Q1 2023 where we sold 5000 units and Q2 2023 where we sold 5500 units, calculate the growth rate.”

🎭 Assign a role and set the stage: Tell your AI it’s an expert in a field, and watch how it takes its role to heart. Unsure where to start? As an exercise, try prompting GPT with “Pretend you’re Shakespeare. Now, craft a poetic sentence about sunrise.”

🌡 Control the thermostat: Dial down the “temperature” setting if you want your AI to play it safe, or turn it up for wilder (and possibly hallucinatory) answers. At a lower temperature, you might get, “Paris is the capital of France.” Crank it up? “Paris, the city where croissants dance in the moonlight!”

💬 The direct approach: Talk to your AI like it’s a slightly forgetful friend. State what you’re after, and make it clear that you’d rather it admit ignorance than guess. This could sound something like: “List the primary colors without mentioning any fruit. If you’re unsure, just let me know.”
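
For the curious, here is roughly what the temperature dial does. In the standard formulation, a model’s raw scores for each candidate next word (its logits) are divided by the temperature before a softmax turns them into probabilities. A low temperature sharpens the distribution toward the top pick, while a high one flattens it so long shots get picked more often. The sketch below is a minimal Python illustration under that assumption; the three candidate words and their scores are made up, and real models choose among tens of thousands of tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw next-word scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for words that might follow "Paris is the capital of ..."
candidates = ["France", "fashion", "dancing croissants"]
logits = [5.0, 2.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    summary = ", ".join(f"{word}: {p:.3f}" for word, p in zip(candidates, probs))
    print(f"temperature={t}: {summary}")
```

Running it shows “France” soaking up nearly all the probability at 0.2, while “dancing croissants” picks up noticeably more at 2.0: the wilder, possibly hallucinatory end of the dial.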

In a nutshell, guiding our AI pals might take a tad more effort, but the crisp, clear, and hallucination-free results? Totally worth the effort.
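
Several of the tips above (a data template, grounded figures, an assigned role, and explicit permission to say “I don’t know”) can be combined into one reusable prompt. Here is a minimal Python sketch that only assembles the prompt text; the function names `build_grounded_prompt` and `growth_rate`, the default role, and all of the wording are hypothetical, and sending the prompt to a model is left out because that depends on which AI service you use. It also computes the expected answer locally (500 more units on a base of 5,000 is 10% growth) so you can check the AI’s reply instead of taking it on faith.

```python
def build_grounded_prompt(rows, question, role="a careful sales analyst"):
    """Assemble a prompt from a role, a data table, and an 'admit uncertainty' rule.

    Hypothetical helper: the wording is illustrative, not a recommended standard.
    """
    header = "| Period | Units sold |\n|---|---|"
    table_lines = [f"| {period} | {units} |" for period, units in rows]
    table = "\n".join([header, *table_lines])
    return (
        f"You are {role}.\n"
        "Use ONLY the data in the table below. Do not invent figures.\n\n"
        f"{table}\n\n"
        f"Question: {question}\n"
        "If the table does not contain enough information, reply exactly: I don't know."
    )


def growth_rate(old, new):
    """Percentage growth from old to new, for checking the model's arithmetic yourself."""
    return (new - old) * 100 / old


sales = [("Q1 2023", 5000), ("Q2 2023", 5500)]
prompt = build_grounded_prompt(
    sales, "What was the growth rate in units sold from Q1 2023 to Q2 2023?"
)
print(prompt)
print(f"Expected answer to check against: {growth_rate(5000, 5500):.1f}%")  # 10.0%
```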

Our AI-guided future: where we go now

In truth, AI is our digital Robin to every Batman endeavor. Yet we must still recognize and rectify its hallucinations if we want a trustworthy digital future.

Now, let’s crystallize what we’ve unearthed: AI’s daydreams can be curtailed. By crafting sharper prompts, grounding our interactions with verifiable data, and actively steering our AI comrades, we can significantly reduce those quirky (and occasionally misleading) detours. Keep in mind: it’s about forging trust in an era dominated by data-driven decisions.

Luckily for us, the tech titans are already stepping up. OpenAI, always the diligent student, has used reinforcement learning from human feedback (RLHF) to groom ChatGPT into a sharper, more discerning entity. Microsoft, with the Bing chatbot in its armory, opted for a different route: shorter conversations, capping how many back-and-forth turns a single chat session can run. It’s like setting a time limit on a chatty friend. Not a bad idea. 🤔

Yet, even these behemoths concede: taming the vast seas of AI’s creative surges is challenging. Patching every hallucinatory leak? That’s a Herculean task.

Even in our AI-driven age, technology, in all its brilliance, is still a tool. It reflects our instructions and our data. As AI continues its dance between cold computation and imaginative jaunts, we must ask ourselves how we can ensure accuracy without stifling creativity. After all, in the balance between fact and fiction, our guiding hands play the pivotal role.