Voices are shaped by so many factors. Our upbringings and cultural backgrounds shape which languages we speak, with our roots linked to certain dialects and sentence structures. Education and entertainment play their part, shifting our slang and expanding our vocabulary. Even within a single neighborhood or city, people speak differently from one another. When we build speech into our technology through voice user interfaces, that range of voices multiplies across states, countries, regions, and continents.

What’s Next for VUI?

As communication companies and tech brands build speech interfaces into their devices, they face big challenges. This innovative industry has room to grow as more and more people gain access to phones, computers, smart appliances, and other devices. Speech is becoming an integral part of how we interact with technology.

In 2020, there were 4.2 billion devices around the world with voice assistants, and this number is forecast to double by 2024. Software designers and engineers have to think carefully about how to improve and expand the ways people speak to these systems, which are called Voice User Interfaces. In this article, we discuss what a Voice User Interface is, what makes a voice UX different from a visual one, how designers can create a well-designed Voice User Interface, and the challenges the industry faces.

What Are Voice User Interfaces?

Voice User Interfaces, or VUIs, enable users to control systems and give them feedback using voice or speech commands. As this technology has expanded massively in recent years, voice commands have quickly become a normal interaction for many people. Communicating with technology by voice has some clear advantages over more traditional methods, such as typing on a keyboard or phone.

Voice User Interfaces allow people to interact with their phone, smart device, or computer hands-free. You can set a reminder while driving without ever taking your eyes off the road. Even though it may already seem normal to some people, simply speaking a command while cooking or working out would have seemed like science fiction 20 years ago. Hands-free control can also greatly benefit people with mobility problems or other physical limitations. VUIs can make technology more open and accessible than ever before.

Voice User Interfaces Are Everywhere

Many of the most popular electronics today have Voice User Interfaces. Apple’s Siri works across Apple’s entire suite of products, while Google Assistant and Amazon Alexa are other popular systems built on a VUI. Voice User Interfaces span a wide spectrum, from narrowly focused programs to all-purpose virtual assistants. Many people now assume that a phone or computer will come with some version of a VUI. In fact, 72 percent of interactions with voice technology are through a digital assistant.

As the public becomes more used to addressing their technology out loud, VUIs are likely to spread rapidly. The widespread availability of voice user interfaces has many potential benefits. VUIs could be programmed to recognize stress levels in voices or to intervene when difficult questions arise. Some assistants could direct users to important resources and helplines when needed. Systems can let a user reach emergency services or quickly obtain vital information without moving an inch. As VUIs become more powerful and sophisticated, they will be able to solve larger problems and perform a wider variety of tasks.

Incredible Room for Innovation with VUI

Many people are used to the simplified commands and inputs of keyboards and phones, but voice interfaces face a steeper challenge. People are rarely fully conscious of how they express thoughts and communicate vocally. Sentence structure is not always predictable, and there are countless ways to communicate the same idea. While this presents unique and unpredictable challenges for VUI designers, it is also why this field is at the forefront of technological innovation today.

What Makes a Voice UX Different Than a Visual One?

If your entire system is based around visual prompts and cues, there are specific limits on what you can create and improve. You can streamline applications and design, but there is only so much that can be done with visual displays alone. This is why voice user experiences (UXs) are so beneficial. Voice expands the ways a user can input commands, receive feedback, and communicate with their devices, which gives designers more leeway.

Voice UX is not just about a user giving a command and the device responding. VUIs can absolutely have a visual component. A flashing light can let you know that your device is listening. Siri, for instance, shows a visual indicator on screen when someone says “Hey Siri”. Amazon’s devices follow similar logic, using indicator lights. These cues give a user the confidence to start speaking. In some situations, however, visual feedback is not enough. If you are running or driving, for instance, you may not be able to look at your screen for the entire interaction. This is where other cues are vital.

How Do VUIs Work?

VUIs also use tactile and auditory feedback to communicate with users. Some phones or smart devices vibrate when a command has been registered or a spoken task is complete. As an owner becomes more familiar with their VUI device, these physical responses can become second nature, allowing users to immediately feel their device working and listening.

As well as visual and tactile cues, voice UX can take full advantage of auditory feedback. This can range from simple to complex responses. A tone or beep can notify a user, while an AI-generated voice can relay information and create a dialogue between a system and a person. With Apple’s AirPods Pro, for instance, different tones indicate which mode the headphones are in, so the sound alone tells the user which setting is active. These subtle solutions help devices with few physical buttons or inputs stay customizable and easy to use. Because a VUI designer has so many ways to help users feel comfortable and connected to their devices, voice UX allows for a much greater level of customization and creativity.

How Do Designers Approach Voice UX?

Exceptional voice user interfaces begin with exceptional designers and engineers. Building these systems takes many rounds of creation, testing, and trial and error. Voice designers aim for seamless interactions between users and voice applications. Their VUIs need to perform many steps at once, deciphering the meaning of each phrase while searching for the right answers and actions to initiate. Designers approaching this challenge typically work through several distinct phases of development and implementation.

Initial Design

There are many different options, styles, and functions for voice interfaces. Designers must research available options and structures before they begin to develop their VUIs. What purpose or audience is the VUI being designed for? What technology, hardware, or device will communicate with the user? Below are just some of the options:

- Phones and tablets

- Computers and laptops

- Smartwatches and other wearables

- Connected televisions and entertainment devices

- Smart speakers (Amazon Echo, Google Home, etc.)

- “Internet of Things” appliances and tools (fridges, locks, thermostats, etc.)

Once a business decides on the application and purpose of the VUI, the advantages and limitations of the hardware will determine which visual, tactile, and auditory feedback to use. It is also important to decide the tone of the system’s voice early. Some devices require a direct and succinct approach, while others (like voice assistants) aim to be more conversational. As these design decisions take shape, designers must also research the intended user base for the VUI.

Research and User Personas

Voice designers should always be aiming to solve user problems. Even for devices with a wide user base, like phones and computers, detailed research can help fine-tune the voice assistant’s design. By understanding a user’s motivations, problems, and modes of input, a designer can focus on the most important aspects of a VUI.

Who is using this device? What will their most common questions and commands be? What are easily missed edge cases? By asking these questions, designers can set themselves up for success. Human behavior may seem unpredictable, but through data and analysis, patterns can be mapped out and better understood. This will help the VUI function smoothly for the greatest number of users. This research and planning stage can also help avoid redesigns and dead ends further along into development.

As you research your potential user base, create a User Persona: a fictional composite of your target user. A good voice designer needs empathy, understanding how users will interpret and use their voice interface. Without a clear picture of the user’s side of the conversation, a voice designer cannot see the whole interaction. Details in this space are very important. The tone of the voice can affect how a person interprets the tone of the conversation. A VUI that sounds robotic or demeaning will be far less successful, even if it gives correct answers to commands, while a voice that sounds natural and human is likely to be far more effective. Taking the time to define a thorough, fact-based user persona can set a project up for success in the long run.

Prototype Conversations

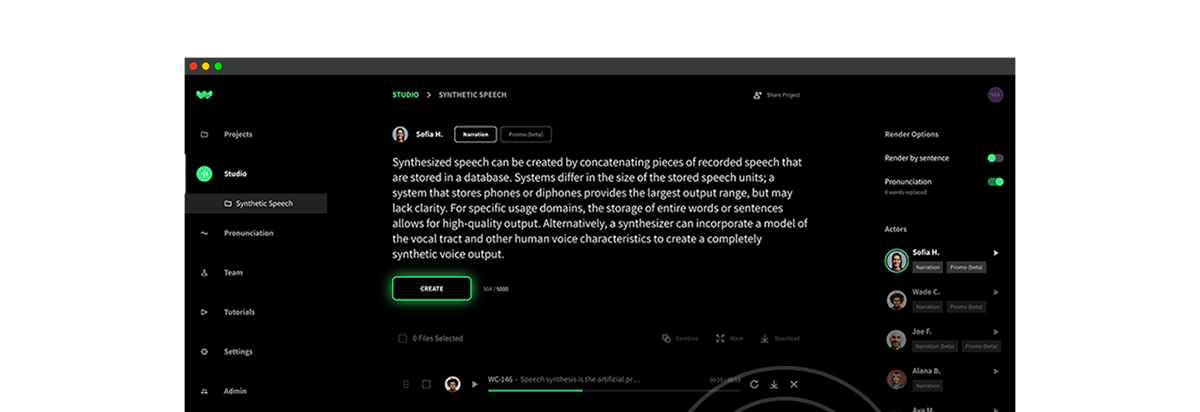

With a solid foundation for the project in place, VUI designers can begin writing the prototype dialogue. This is where successful VUIs take shape, starting from simple commands and responses. The flow of the conversation should guide users from question to question. When a device relies solely on visual design, it is easy to steer users along particular paths laid out in text fields and buttons. With spoken words and phrases, that level of concrete guidance is impossible (especially if the designer is aiming for naturalistic dialogue). Potential misunderstandings and errors are best found by walking through the dialogue repeatedly and mapping out its possible forks. Software exists that can streamline this process by laying out each dialogue flow; doing it manually can greatly lengthen the process.
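Part of mapping dialogue forks can be automated. The sketch below assumes a dialogue is stored as a graph in which each node is a prompt and each edge is a user intent; every node and intent name is hypothetical. A breadth-first walk from the start node flags dead ends (reachable prompts with no way forward) and prompts that no path ever reaches.

```python
from collections import deque

# Hypothetical dialogue map: each key is a prompt the VUI speaks, and each
# inner entry maps a user intent to the next prompt. Names are invented.
DIALOGUE = {
    "start": {"book_hotel": "ask_city", "check_weather": "ask_city_weather"},
    "ask_city": {"give_city": "ask_date"},
    "ask_date": {"give_date": "confirm"},
    "confirm": {"yes": "done", "no": "ask_city"},
    "ask_city_weather": {},          # the user's answer is never handled
    "done": {},
    "cancel_flow": {"yes": "done"},  # no path from "start" leads here
}
TERMINAL = {"done"}


def audit_flow(graph, start="start", terminal=TERMINAL):
    """Return (dead_ends, unreachable) for a dialogue graph."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        # Nodes missing from the graph have no outgoing intents.
        for nxt in graph.get(node, {}).values():
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    # A dead end is a reachable, non-terminal prompt with no way forward.
    dead_ends = sorted(n for n in seen if n not in terminal and not graph.get(n))
    unreachable = sorted(set(graph) - seen)
    return dead_ends, unreachable


if __name__ == "__main__":
    dead, lost = audit_flow(DIALOGUE)
    print("dead ends:", dead)
    print("unreachable:", lost)
```

On the sample map, this reports `ask_city_weather` as a dead end and `cancel_flow` as unreachable. Dedicated flow-design tools track far more (reprompts, confirmations, error paths), but the same reachability check applies.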

There are some major challenges here that designers have to overcome. People do not always think or speak in linear ways. A user may give a command like: “I need a place to stay in Denver on June 1st. A hotel would be best.” A VUI will need to pull out the relevant details (city, date, and type of lodging) regardless of the order in which they appear. On a booking website, options are laid out visually in a specific order, but sentence order and structure are almost infinitely variable. Most computing systems are designed to follow logical paths with clear branches and options, and speech-based input makes that kind of software much harder to build.
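To illustrate pulling out details regardless of their order, here is a minimal keyword-and-pattern sketch for the hotel example. The vocabularies, slot names, and functions are hypothetical; production assistants typically rely on trained language-understanding models rather than fixed lists like these.

```python
import re

# Hypothetical vocabularies for a toy booking VUI.
CITIES = ["Denver", "Boston", "Chicago"]
LODGING = ["hotel", "hostel", "apartment"]
MONTHS = ("January|February|March|April|May|June|July|"
          "August|September|October|November|December")
# Matches dates like "June 1st" or "june 12".
DATE_RE = re.compile(rf"\b({MONTHS})\s+(\d{{1,2}})(?:st|nd|rd|th)?\b", re.IGNORECASE)


def extract_slots(utterance):
    """Fill booking slots by searching the whole utterance, not by position."""
    slots = {"city": None, "date": None, "lodging": None}
    for city in CITIES:
        if re.search(rf"\b{re.escape(city)}\b", utterance, re.IGNORECASE):
            slots["city"] = city
    match = DATE_RE.search(utterance)
    if match:
        slots["date"] = f"{match.group(1).title()} {int(match.group(2))}"
    for kind in LODGING:
        if re.search(rf"\b{kind}\b", utterance, re.IGNORECASE):
            slots["lodging"] = kind
    return slots


def missing_slots(slots):
    """List the slots the VUI still needs to ask the user about."""
    return [name for name, value in slots.items() if value is None]
```

Both “I need a place to stay in Denver on June 1st. A hotel would be best.” and the reordered “A hotel in Denver for June 1st, please.” yield the same slots. When a user only says “Book a hotel in Chicago”, `missing_slots` returns the date, telling the VUI which follow-up question to ask.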

Testing and Refinement

After a designer writes and maps out the basic dialogue flows, testing and refinement are vital to give users the best possible voice UX. In general, there are two main types of VUI testing.

User Testing involves asking initial testers and users questions about their needs and expectations. This can be very useful, since a designer can address representatives of their user base directly. But this type of testing is limited to what people consciously notice and report. This is where the other type of testing shines.

Usability Testing is based on observing the actual ways that users interact with the voice interfaces on their devices. This can be time-consuming, involving additional participants and methods of recording usage and data. But it is important for finding potential missed opportunities and pitfalls in the voice design.

Through this testing and feedback, designers can refine the VUI over repeated iterations. Future updates can incorporate additional test data, helping the voice interface continuously improve. A VUI that a designer creates in a vacuum, based solely on their own assumptions, will not be as applicable or effective for a wide user base. Especially in a field that is developing and changing so rapidly, designers should keep improving and expanding their VUI systems.

How Does a Well-Designed VUI Sound?

Overall, a user’s interaction with a voice interface should follow a basic order. This can be expanded or repeated based on the circumstances. Designers should keep the following system in mind, as it provides a solid framework to build up an effective VUI.

Input

Starting with a trigger word or phrase, a user can initiate dialogue with their device. Phrases like “Hey Siri” or “Hey Google” are famous examples. Then the device should give an opening cue, signaling to the user that the voice interface is ready and listening. This is where a designer can choose or combine visual, tactile, or audible elements to communicate. This cue needs to be clear. It can be a very frustrating experience if the speaker is not sure when the phone (or other device) has started listening. Questions can get cut off and need repeating, reflecting poorly on the voice assistant. At the input stage, the VUI should be listening, receiving a command or query from the user.

Thinking

While the voice interface is listening, it can give feedback. If the device has a display, the system can show a transcript of the request for the user to check. If screen real estate is limited (on a smartwatch, for example), a small symbol or spinning wheel can show that the voice assistant is paying attention and capturing every word spoken.

Once the user pauses, the VUI needs to end its active listening period with a closing cue. With a beep, a disappearing symbol, or a tone, the interface should signal that it is now considering its answer. The user should never be in doubt about where they are in the conversation. The VUI then processes the request, ideally as quickly as possible.
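Deciding when the user has paused is known as endpointing. A minimal sketch, assuming audio arrives as fixed-length frames already reduced to an energy value between 0.0 and 1.0: listening closes only after speech has started and a run of quiet frames has followed. The threshold and frame counts are hypothetical, and real systems usually rely on trained voice activity detection rather than a fixed energy cutoff.

```python
# Hypothetical parameters: each frame is a short slice of audio (say 20 ms)
# summarised by its energy on a 0.0-1.0 scale.
SILENCE_THRESHOLD = 0.1   # energy below this counts as silence (assumed)
HANGOVER_FRAMES = 25      # about 0.5 s of silence at 20 ms frames (assumed)


def find_endpoint(frame_energies, threshold=SILENCE_THRESHOLD, hangover=HANGOVER_FRAMES):
    """Return the index of the frame where listening should stop, or None.

    Listening closes only after the user has started speaking and then
    stayed quiet for `hangover` consecutive frames, so a short pause
    mid-sentence does not cut the question off.
    """
    heard_speech = False
    quiet_run = 0
    for i, energy in enumerate(frame_energies):
        if energy >= threshold:
            heard_speech = True
            quiet_run = 0          # any speech resets the silence counter
        elif heard_speech:
            quiet_run += 1
            if quiet_run >= hangover:
                return i           # time to give the closing cue
    return None                    # still listening (or never heard speech)
```

The `hangover` window is the main design lever: too short and a mid-sentence breath cuts the question off, too long and the user is left waiting for the closing cue.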

Output

The final stage of the conversation is a response from the VUI. Whether it gives more information, offers a link, sets a reminder, or does any of a myriad of other things, the voice interface should now answer the user. Here, the flow can begin again if the user wants to ask another question. By keeping things natural and fluid, this process can repeat as many times as necessary. By listening and responding with clarity, a good VUI can create a great experience for the user.

Below is a real-world example of this flow.

“Hey Siri?” A logo appears on the device (giving a visual opening cue) while a chime also sounds. “What time is it in London right now?” The text of the question appears on the screen. After a pause to consider, the logo disappears (closing cue) and the VUI responds: “The time in London is now 2 p.m.” The answer also stays on the screen afterwards, giving the user time to read and absorb the information.

The example above may seem simple, but this flow of input and feedback creates a solid base for a voice interface, making a user more comfortable talking to their device. A user should never be wondering if their device is listening. Clarity is key when it comes to effective voice interfaces.
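The input, thinking, and output stages map naturally onto a small state machine. The sketch below is hypothetical: the class, its wake phrase, and its event methods stand in for the audio and recognition engines a real device would use. It records each cue so you can check that every stage gives the user a clear signal.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()        # waiting for the trigger phrase
    LISTENING = auto()   # opening cue given; capturing the request
    THINKING = auto()    # closing cue given; processing the request
    RESPONDING = auto()  # speaking or showing the answer


class VoiceSession:
    """Minimal sketch of the input -> thinking -> output loop.

    The wake phrase, cue names, and event methods are hypothetical.
    """

    def __init__(self, wake_phrase="hey assistant"):
        self.wake_phrase = wake_phrase
        self.state = State.IDLE
        self.cues = []       # cues presented to the user, in order
        self.transcript = ""

    def hear(self, text):
        if self.state is State.IDLE and text.lower().startswith(self.wake_phrase):
            self.state = State.LISTENING
            self.cues.append("opening cue")      # e.g. chime plus on-screen logo
        elif self.state is State.LISTENING:
            self.transcript = text
            self.cues.append("show transcript")  # lets the user check the request

    def user_paused(self):
        if self.state is State.LISTENING:
            self.state = State.THINKING
            self.cues.append("closing cue")      # e.g. the logo disappears

    def respond(self, answer):
        if self.state is State.THINKING:
            self.state = State.RESPONDING
            self.cues.append(f"say: {answer}")

    def finish(self):
        # Return to idle so the flow can begin again with the next request.
        if self.state is State.RESPONDING:
            self.state = State.IDLE
            self.transcript = ""
```

Replaying the London example with `hear("Hey assistant")`, `hear("What time is it in London right now?")`, `user_paused()`, `respond(...)`, and `finish()` produces an opening cue, the transcript, a closing cue, and the answer, then returns the session to `IDLE`. Events that arrive out of order, such as a response before the user has paused, are ignored.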

Now that we’ve reviewed the design and workflow of quality VUIs, let’s consider some potential challenges that designers face.

Challenges for Voice User Interfaces

Especially in a newer field of technology, there are some difficult hurdles to clear. Designers should be aware of these issues and work intentionally to help users feel comfortable relying on the VUIs on their devices.

Privacy and Security

Some users may feel uncomfortable or hesitant about using devices that are constantly listening to and recording them. If they do not consider the VUI secure and safe, it is unlikely the system will become part of their daily lives. Designers and companies should be as transparent as possible, making it clear when devices are listening and what happens to the collected data. Settings and options can also help here. Some devices let users choose between constantly listening for the trigger phrase and switching on only when a button is pressed. This compromise can give hesitant users control and build trust around the VUI.

Speaking in Public

For some users, there is still a social stigma around speaking to a device in public. In fact, 62 percent of users say they feel awkward using voice commands in public. This will most likely change over time as VUIs become better, more efficient, and more widely adopted. As people lean more heavily on their voice assistants and tools, it will become less awkward for everyone to use them in shared spaces. When voice interfaces are much faster than typing and understand requests reliably, the benefits can outweigh the awkwardness and make VUIs more socially acceptable.

Accuracy and Speed

It can be frustrating to begin a dialogue with a voice assistant, speak the trigger word, and clearly give a command, only for it to fail. If a VUI has to ask again or misinterprets the question, users may avoid using the tool. A text interface may be slower and more awkward, but it leaves less room for misunderstanding. These errors also slow the entire process down.

As many people have experienced, it is demoralizing to spend more time getting Alexa to understand a request than it would take to perform the task or find the information yourself. This is why testing is so important. By iterating through many versions of a VUI, finding edge cases, and refining the software, a designer can avoid as many errors and misinterpretations as possible. With a smooth voice UX, this technology can continue to grow and become even more popular than it is today.
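One common way to limit repeated failures is to act on the recognizer’s confidence score: act on confident results, confirm uncertain ones, and ask again only a limited number of times. This is a minimal sketch; the thresholds, messages, and function name are hypothetical and would need tuning through testing with real users.

```python
# Hypothetical thresholds; real values would come from user testing.
ACCEPT_AT = 0.85   # act immediately at or above this confidence
CONFIRM_AT = 0.5   # between CONFIRM_AT and ACCEPT_AT, confirm first
MAX_REPROMPTS = 2  # stop asking the user to repeat after this many misses


def next_action(hypothesis, confidence, attempts):
    """Choose how to handle one recognition result.

    `hypothesis` is the recognizer's best guess at what the user said,
    `confidence` its score from 0.0 to 1.0, and `attempts` how many times
    the VUI has already asked the user to repeat themselves.
    """
    if confidence >= ACCEPT_AT:
        return ("act", hypothesis)
    if confidence >= CONFIRM_AT:
        return ("confirm", f"Did you mean: {hypothesis}?")
    if attempts < MAX_REPROMPTS:
        return ("reprompt", "Sorry, I didn't catch that. Could you say it again?")
    # Repeated failures frustrate users, so offer another route instead of looping.
    return ("fallback", "I'm still having trouble. You can also try typing your request.")
```

Capping reprompts keeps the VUI from asking the same question over and over; after `MAX_REPROMPTS` misses, it offers the user another way to finish the task.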

Listen for the Voice User Interfaces of the Future

As workplaces change and our daily schedules become increasingly digitized and mediated by tech, voice interfaces may take a central place in many more people’s lives. By understanding the foundations included in this article, a designer can set themselves up for success.

And, while these recommendations and examples can be useful tools for creating an effective VUI, keep in mind that this field is constantly in flux. It’s important to keep an ear to the ground to listen for the research, innovation, and possibility ahead.