Audio by Theo K. using WellSaid Labs

There’s no denying it: AI is taking the world by storm. But while the rapid advancements are exciting, there’s a pressing conversation that needs to happen alongside them. Yup, we’re talking about ethical AI. While ethical AI discussions may seem new, the concept has roots as far back as 1942. But it’s only now that we’re truly grappling with its broader implications.

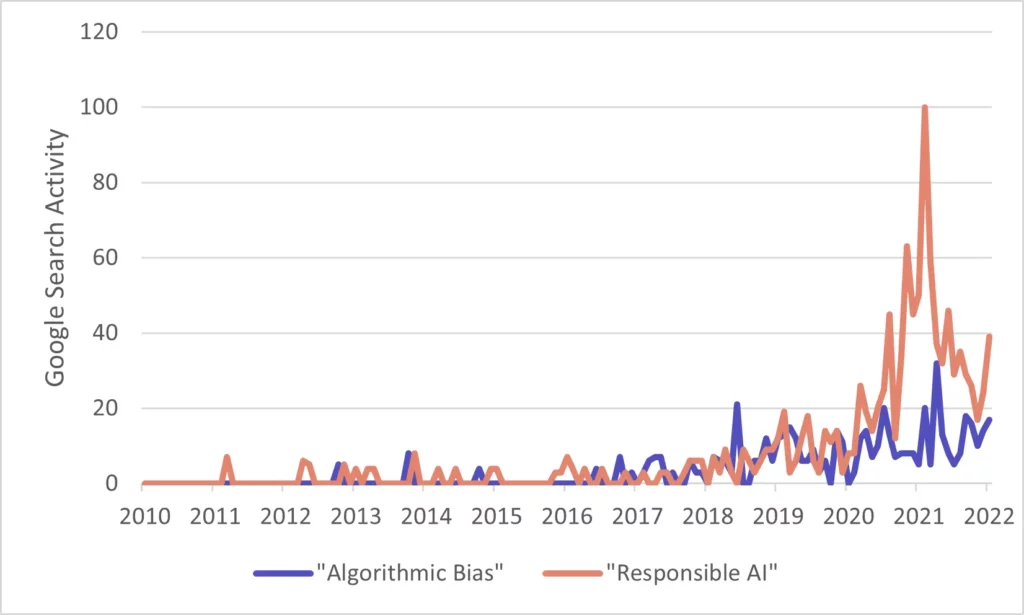

Curious about how much traction the ethical AI conversation is gaining? A notable increase in related online searches gives a hint.

The importance of ethical AI stretches from areas like copyright protection and legal concerns to addressing potential biases. Historically, technology tends to amplify our human flaws. However, this rise of AI offers a chance to course-correct and build systems that are fairer and more responsible.

Here’s a thought to mull over: by 2024, more than half of large enterprises will be integrating AI. What’s more, instead of taking away jobs, and contrary to pervasive automation anxiety, AI is actually projected to generate 133 million new roles by 2030. With this massive growth, the need for an ethical framework becomes paramount. If we’re to harness AI’s potential without repeating past mistakes, a solid foundation rooted in ethical practices is non-negotiable.

How does that saying go again? Those who cannot remember the past are condemned to repeat it.

To better understand this blend of tech and ethics in action, we’re turning the spotlight on standout companies championing ethical AI practices. So whether you’re knee-deep in the tech world or simply an interested observer, join us as we dive into the actions, best practices, and lessons from these industry leaders.

Let’s get to it!

Player 1: WellSaid Labs

When we’re on the hunt for shining examples of ethical AI practices in the wild, it’s hard not to turn our gaze inward towards WellSaid Labs. Nestled within the very core of WellSaid Labs is a profound commitment to ethical practices. This ethos isn’t something we’ve merely tacked on. It’s woven into our organizational DNA.

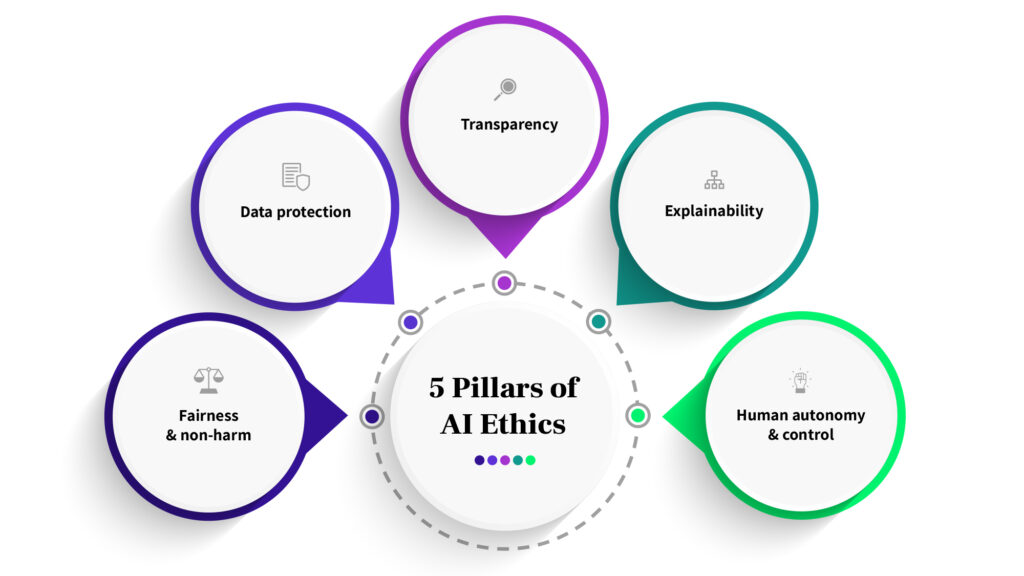

Let’s break it down using the 5 essential AI ethics principles:

Fairness and non-harm: At WellSaid Labs, the term “bias” isn’t taken lightly. We’ve set up robust mechanisms to continually monitor and tweak our models, rooting out biases wherever they lurk. The cherry on top? Our strong emphasis on content moderation ensures that the word “fairness” is a lived reality.

🌟 Key lesson: Vigilance is the key to fairness. Constantly monitoring and refining AI systems can make all the difference.

Data protection: With cyber threats looming larger every day, data protection is non-negotiable. Recognizing this, WellSaid is relentless in meeting benchmarks, ensuring that users’ data remains locked up tighter than Fort Knox.

🌟 Key lesson: Achieving and maintaining compliance benchmarks is a testament to a company’s commitment to user data protection.

Transparency: WellSaid Labs holds the belief that open communication forms the bedrock of trust. This goes beyond spewing technicalities—it’s about making sure voice actors know exactly how their data is being used, with explicit permissions taking center stage.

🌟 Key lesson: Openness fosters trust. When users know the how and why of data usage, confidence in the system grows.

Explainability: Beyond just churning out results, WellSaid Labs is dedicated to the why behind them. Meaning, we’re ALL about understanding the nuances of human voices. As such, our deep dives into insights, like the details of our Audio Foundational Model (AFM), reveal a commitment to bridging the gap between tech and humanity.

🌟 Key lesson: Making AI understandable is as crucial as making it functional.

Human autonomy and control: Here’s where WellSaid Labs really shines. We’re staunchly against voice cloning without consent. Plus, we empower users with extensive customization options, even during beta testing, ensuring that users always have the reins.

🌟 Key lesson: Empowering users to customize their AI experience ensures a sense of control and autonomy.

In the grand scheme of AI, WellSaid Labs stands out for both our tech prowess and our unwavering commitment to ethical practices. As we pave the way, the hope is that many others in the AI world will be inspired to walk the ethical path too, ensuring a future where technology and humanity coexist harmoniously.

Player 2: Microsoft

In the vast universe of tech giants, Microsoft has always been a constellation that shines particularly bright. But in recent years, they’ve added another feather to their already illustrious cap: a pioneering approach to ethical AI.

Let’s decode Microsoft’s venture into the realm of responsible AI.

Purposeful pledge: Microsoft’s ethos is clear—they’re not just in the game of creating AI. Rather, they want it to be responsible and lasting. The Microsoft Responsible AI Standard principles serve as the pillars shaping how Microsoft conceives, crafts, and critiques its AI models.

🌟 Key lesson: Embedding ethical principles at the inception of AI development ensures a consistent and dedicated approach.

Global collaborations: Microsoft actively joins forces with researchers and academics the world over. The goal? To propel the cause of responsible AI further and faster, ensuring innovation without compromising safety.

🌟 Key lesson: Collaborative efforts multiply impact. Pooling global knowledge can revolutionize responsible AI practices.

Ethical infrastructure: Dive into Microsoft’s internal workings, and you’ll find dedicated bodies like the Office of Responsible AI (ORA), the AI, Ethics and Effects in Engineering and Research (Aether) Committee, and the Responsible AI Strategy in Engineering (RAISE). These entities are at the frontline, translating ethical principles into tangible actions.

Guiding principles: Microsoft’s commitment to responsible AI is anchored in robust ethical principles: fairness, reliability, safety, privacy, security, inclusiveness, transparency, and accountability. Their approach is strikingly people-centric, ensuring that the human touch remains preserved in the algorithmic maze.

🌟 Key lesson: An exhaustive list of ethical principles, when implemented sincerely, ensures that AI remains a boon.

Empowering others: Microsoft’s vision isn’t insular. They’re on a mission to help other organizations incorporate ethical AI, guiding them from initial implementation to governance. With a treasure trove of tools, guidelines, and resources, Microsoft ensures that organizations achieve ethical AI practices efficiently.

🌟 Key lesson: Ethical AI is largely about elevating the entire ecosystem.

In a nutshell, Microsoft isn’t merely tinkering with AI in a lab. They’re forging a future where AI and ethics are deeply intertwined realities.

Player 3: Fiddler

We live in a world rapidly transforming with AI, but with great power comes great responsibility. Enter Fiddler: a company not just riding the AI wave but ensuring that it’s steered ethically.

Model maintenance, simplified: With AI models becoming increasingly integral to enterprises, monitoring their performance is non-negotiable. But the challenges are manifold—from generative AI models to predictive ones. Often, teams find themselves lost without the right tools, spending inordinate amounts of time troubleshooting. Fiddler eliminates this hassle with its unified management platform that offers centralized controls and actionable insights.

🌟 Key lesson: Ensuring ethical AI is about the processes, too.

A comprehensive AI toolkit: With Fiddler, the vision is clear—AI should be fair and inclusive. To achieve this, they’ve put forth a monitoring framework that provides a panoramic view into model behavior. Insights are consistently deep, offering explanations and root cause analysis. Spotting performance glitches becomes almost instantaneous, nipping potential negative business ramifications in the bud.

🌟 Key lesson: Transparent AI is effective AI.

Bringing ethics front and center: Let’s face it—ensuring fairness in AI models can be like finding a needle in a haystack if you’re in the dark about model behavior or prediction rationales. Fiddler throws a spotlight on this, allowing precise detection of biases, not just in ML models but also datasets. It’s all about demystifying the black box of AI and making it more human-understandable. By enabling the deployment of robust AI governance and risk management processes, Fiddler ensures AI doesn’t just replicate past biases but paves the way for fairer outcomes.

🌟 Key lesson: Fairness in AI is a continuous journey, demanding constant vigilance.

Tools for today and tomorrow: Fiddler offers a highly scalable solution. Their tools evolve, fostering advanced capabilities over time. From automating prediction documentation to enabling human input in ML decisions, Fiddler understands that trust in AI comes from transparency. And speaking of transparency, how about digging into hidden intersectional unfairness? Or using metrics like disparate impact and demographic parity to keep fairness in check? With Fiddler, all this and more isn’t just possible—it’s a reality.
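Wondering what those two fairness metrics actually measure? Here's a minimal sketch in plain Python. To be clear, this is not Fiddler's implementation or API. The function names, the toy predictions, and the group labels are all made up for illustration. Demographic parity looks at the gap between groups' rates of positive predictions, while disparate impact looks at the ratio of those rates.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest selection rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates, unprivileged, privileged):
    """Selection rate of the unprivileged group divided by the privileged one."""
    if rates[privileged] == 0:
        raise ValueError("privileged group has no positive predictions")
    return rates[unprivileged] / rates[privileged]


# Toy data (hypothetical): 1 = approved, 0 = declined, for groups "a" and "b".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
dp_gap = demographic_parity_difference(rates)
di_ratio = disparate_impact_ratio(rates, unprivileged="b", privileged="a")

print(f"selection rates: {rates}")
print(f"demographic parity difference: {dp_gap:.2f}")
print(f"disparate impact ratio: {di_ratio:.2f}")
```

A common rule of thumb treats a disparate impact ratio below 0.8 as a flag worth investigating, though the right threshold depends on the context and any applicable regulation.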

🌟 Key lesson: Building ethical AI is an evolving endeavor, demanding tools that can pivot, scale, and adapt.

To put it succinctly, Fiddler is sculpting a future where AI aligns seamlessly with human values, ensuring businesses don’t just profit but also progress. As the landscape of AI continues to unfold, companies like Fiddler are a testament to the fact that it’s entirely possible to combine cutting-edge technology with time-honored ethics.

Player 4: IBM

When we talk about pioneers in the tech world, it’s almost impossible to have that conversation without mentioning IBM. However, in today’s rapidly evolving digital landscape, being a pioneer requires ensuring those technologies are ethically sound. And this is where IBM shines brilliantly.

Building on trust: For IBM, trust is the bedrock of their AI pursuits. With their staunch commitment to ethical principles, IBM aims to create AI systems that are extremely trustworthy. They get it. AI’s potential is infinite, but it’s only as good as the trust we place in it. In a revealing survey conducted by IBM, a whopping 78% of senior decision-makers emphasized the critical importance of trusting AI insights, but only if they’re fair, safe, and reliable.

🌟 Key lesson: Trust is the currency of AI. Earn it, and the possibilities are endless.

Pillars of ethical AI: It’s one thing to talk about ethical AI, but quite another to practice it. IBM breaks it down into five tangible pillars: explainability, fairness, robustness, transparency, and privacy. Each of these pillars is a commitment, shaping how IBM approaches and creates AI systems. From making AI understandable (explainability) to ensuring its resilience (robustness), IBM is leaving no stone unturned.

🌟 Key lesson: Ethical AI can be simpler than you may realize. Break it down, make it tangible, and then practice what you preach.

Center of Excellence for Generative AI: With the recent unveiling of their Center of Excellence (CoE) for Generative AI, IBM is ramping up their commitment to ethical AI. With an army of over 1,000 consultants, all well-versed in generative AI expertise, IBM is on a mission to revolutionize core business processes. Be it supply chain dynamics, finance intricacies, talent management, or enhancing customer experiences, IBM’s CoE is set to be a game-changer.

🌟 Key lesson: Commitment to ethical AI demands resources, expertise, and relentless innovation.

AI Ethics Board and IBM Research: Institutionalizing ethics is perhaps one of IBM’s masterstrokes. The IBM AI Ethics Board, a cross-disciplinary entity, actively fosters a culture steeped in ethical, responsible, and trustworthy AI, influencing IBM’s policies, practices, and even their research and products. IBM Research, meanwhile, is relentless in its pursuit of AI solutions that are trust-centric.

🌟 Key lesson: When it comes to ethical AI, it’s vital to walk the talk at every institutional level.

IBM stands as a shining example that technological innovation and ethical considerations can go hand-in-hand. In their quest for responsible AI, they’re showing the world that with the right principles, the AI-driven future can be both brilliant and benevolent.

Player 5: Accenture

In the vast world of AI, if there’s one company that’s steadily emerged as the vanguard of ethical practices, it’s Accenture. They’ve recognized that, while AI is shaping the future, it’s also hugely about the ethics and transparency of its deployment.

Trust, a rare commodity: According to Accenture’s 2022 tech vision research, trust in AI is somewhat of an endangered species. A startlingly low 35% of global consumers trust how AI is being implemented by organizations. That’s a rather minuscule slice of the pie. But here’s the kicker—77% believe that organizations should face the music if they misuse AI.

🌟 Key lesson: It’s not enough to just implement AI. It’s how you do it that counts. And the world is watching. Closely.

Practicing excellence: The CoE isn’t just a fancy title at Accenture. It’s a veritable powerhouse. This Generative AI and LLM Centre of Excellence is a colossal entity boasting a whopping 1,600 employees. And it’s not just about quantity but quality—with a significant portion of this talent pool positioned in India, a burgeoning tech hub. This regional focus underscores Accenture’s commitment to global capability building.

🌟 Key lesson: When building a Centre of Excellence, go big or go home. It’s about both the resources themselves and how you strategically position them.

Training galore: Accenture’s on a mission to arm their troops with the knowledge and expertise of AI. An additional 40,000 employees are now AI-literate, all prepped to back the CoE. This mammoth training initiative is a testament to Accenture’s commitment to capacity building.

🌟 Key lesson: Having AI technology is one thing. Having an army of experts to wield it is another. Invest in minds, not just tools.

In the grand tapestry of AI, Accenture is weaving a narrative about conscious and responsible innovation. As they meld tech and ethics, they’re challenging the rest of the industry to meet the same standard.

Player 6: Parity AI

Imagine being stuck in a room where everyone is speaking a different language. You might catch a word here and there, but understanding the full message? Almost impossible. That’s what it felt like for Rumman Chowdhury, the “responsible AI” lead at Accenture. Every day was a complex game of translating jargon-laden speak between data scientists, lawyers, and executives. The goal? Ensuring the AI models were not only accurate but ethically sound. And let’s be frank—it was like trying to solve a jigsaw puzzle in the dark.

The translation woes: Auditing a single AI model became an endeavor stretching over months. The crux of the problem? There wasn’t a common language or a centralized tool to make the process smoother. But as they say, where there’s a will (or in this case, a lot of frustration), there’s a way.

🌟 Key lesson: If you constantly find yourself in the middle of a problem, maybe it’s an opportunity in disguise.

Enter Parity AI, Rumman’s brainchild born in the latter part of 2020. With a mission to streamline the auditing process of AI models, Parity AI is more than just a toolset. It’s a translator, guide, and mentor rolled into one. Clients can now decide what they need: a bias check, a legal compliance review, or something else, and then get concise, actionable recommendations. Imagine shifting from months of auditing to just a few weeks.

It’s like upgrading from a rickety bicycle to a sleek sports car!

🌟 Key lesson: Efficiency is key. If you can find a way to achieve the same goal in a fraction of the time without compromising quality, you’re on to a winner.

Now, Parity AI isn’t alone in this noble quest. The AI landscape is now dotted with startups eager to offer solutions ranging from bias-mitigation to explainability platforms. And while their initial clientele hailed from heavily regulated industries, the winds have shifted. The media’s spotlight on bias and privacy issues, coupled with a looming shadow of potential regulation, has companies queuing up. Some want to wear the badge of responsibility, while others are looking to dodge future regulatory bullets.

Rumman Chowdhury puts it aptly in her chat with MIT Technology Review: “So many companies are really facing this for the first time. Almost all of them are actually asking for some help.”

🌟 Key lesson: Being proactive in addressing issues, especially in areas as dynamic as AI, is just good business. Plain and simple.

Parity AI is carving a niche by bridging the AI ethics conversation gap, proving that when innovation meets responsibility, the possibilities are endless.

Player 7: Google

Ever paused a moment to wonder how your favorite search engine seems to know exactly what you’re looking for? Or how your voice assistant can differentiate between “dessert” and “desert”? At the heart of these everyday marvels lies AI. And where there’s AI, there’s the constant challenge of making it ethical.

Enter Google. As one of the world’s leading tech giants, the spotlight on its AI ethics is brighter than most. So, how does Google shine or occasionally stumble under this scrutiny?

An ethical vision: Google is all about ensuring that the AI serving up that information is unbiased and inclusive. With its rigorous human-centered design approach and deep dives into raw data, Google is setting benchmarks. They’re not just theorizing but offering practical advice on building AI that stands up for fairness and inclusion.

🌟 Key lesson: Design with humanity in mind. Algorithms may be built with code, but their impact is very much human.

Drawing the line: Google’s not shy about setting boundaries. While the allure of AI applications in weapons and surveillance might be tempting for many, Google has drawn a firm line. Their AI won’t be a part of technologies that infringe on human rights. And while at it, they’re also diving deep into areas like skin tone evaluation in machine learning—a step forward in inclusivity.

🌟 Key lesson: Just because you can doesn’t mean you should. Ethical lines in tech are crucial.

However, the road to ethical AI hasn’t been without its bumps for Google. Their commitment to the cause is evident with a dedicated 10-member team focusing solely on ethical AI research. But the journey hit a rocky patch when Google decided to part ways with two leading figures from their AI ethics research team. The move sent ripples across the tech community and brought Google’s commitment under the microscope. Doubts arose.

Is Google truly ready to embrace criticism, or will it muffle voices that dare to critique?

Despite the controversy, Google hasn’t hit the brakes on its ethical AI journey. Since 2018, it’s been pedal to the metal with various initiatives. Be it training based on the “Ethics in Technology Practice” project, the enlightening “AI Ethics Speaker series”, or the introduction of fairness modules in their free Machine Learning crash courses—Google is in it for the long haul.

🌟 Key lesson: Commitment to ethics isn’t a one-time act. It’s an ongoing effort with highs, lows, and the determination to keep going.

Google’s endeavor in ethical AI showcases a blend of intent, action, and occasional controversy. It’s a testimony to the fact that the path to ethical AI isn’t straightforward, but with persistence, even giants can chart a course that’s both innovative and responsible.

Player 8: Credo AI

Let’s set the stage, shall we? The world of AI is like the Wild West, with pioneers venturing into unknown territories, making groundbreaking discoveries, but also grappling with newfound challenges. And if AI is the Wild West, then ethics in AI is the sheriff in town. But not to worry! For companies navigating these murky waters, Credo AI is here to offer a lifeboat of responsible governance.

The Credo AI magic: If AI were a dish, Credo AI’s Responsible AI Governance Platform would be the secret sauce. Its essential features assess Responsible AI practices and translate those assessments into tangible risk scores across areas like fairness, performance, privacy, and security. Think of it as a health check-up for your AI, ensuring it’s in tiptop shape. Even better, Credo AI is also prepped for the changing AI landscape. With out-of-the-box regulatory readiness, this platform is one step ahead, setting guardrails that reflect both current industry standards and the looming wave of AI regulations.

🌟 Key lesson: Adaptability is key. Being future-ready doesn’t just mean embracing upcoming tech but also forthcoming governance.

Empowering through ethical standards: Credo AI goes beyond checks and balances. It’s about empowerment. Their overarching goal? Enabling organizations to whip up AI solutions that uphold the highest ethical standards. The beauty of Credo AI lies in its comprehensive approach—offering real-time, context-driven governance of AI.

🌟 Key lesson: Governance is not a bottleneck. When done right, it’s the wind beneath the wings of innovation.

Trusted by the best: Trust isn’t given—it’s earned. And Credo AI has earned it in spades. Working with an array of companies, from the financial maestros to tech titans, insurance innovators, and even government entities, Credo AI has become the go-to for crafting AI governance platforms. Their holistic approach provides layers of trust, allowing businesses to harness the power of AI for positive outcomes without the nagging worry of ethical missteps.

🌟 Key lesson: Building trust is paramount. A trusted partner can guide your every step.

Credo AI can be seen as a beacon for companies venturing into the dynamic realm of AI. By offering a guiding hand, it ensures that the AI evolution is ethically sound. And in a rapidly changing world, that’s a credo we can all stand behind.

Player 9: Deepchecks

Ever built something with so much effort, only to later wonder if there was a glitch in it? Imagine spending millions on constructing an intricate machine learning (ML) model only to face the unnerving possibility that it could have flaws. Flaws that might go unnoticed for years. Flaws that could cripple its efficiency and your trust!

The genesis: Before “machine learning” was the buzzword it is today, a group of pioneers were already knee-deep in it. Leading top-tier research groups and navigating the complex terrains of varied datasets and business constraints, they unearthed a glaring gap. While their organizations invested heavily in building ML models, they lacked the means to effectively “check” these models for issues. Here’s the kicker: unlike traditional software, ML systems can fail silently. This means certain glitches could lurk, undetected, for ages. The need for a solution was pressing. And, thus, Deepchecks was created.

🌟 Key lesson: Innovation often stems from personal experience. Identifying gaps in the existing systems and processes can lead to groundbreaking solutions.

Mission possible: Deepchecks’s mission is to bestow organizations with the power to command their ML systems. Their magic wand? A comprehensive tool that monitors those systems continuously rather than sporadically. In essence, it’s like having a 24/7 watchdog, ensuring your ML systems remain faultless.

🌟 Key lesson: Continuous improvement is the name of the game. Regularly testing and refining models is essential to ensure they operate at peak efficiency.

Ethics and open source, hand in hand: Beyond their core offering, Deepchecks is committed to transparency and knowledge-sharing. How? By publishing research documents and curating a detailed AI ethics glossary. But that’s not all. Initially, Deepchecks Open Source was the Sherlock Holmes of the research phase, focusing solely on testing ML. But as of June 2023, the platform has evolved to offer both testing and monitoring, ensuring data scientists and ML engineers can execute a holistic validation process while staying within the open-source realm.

🌟 Key lesson: Adapt and expand. Deepchecks recognized the changing needs of the industry and tailored their offering to meet those demands head-on.

Seamless integration FTW: The beauty of Deepchecks Open Source? It’s designed for seamless integration. With just a handful of code lines, you can embed it into your CI/CD scripts, ensuring that your rejuvenated model remains glitch-free when pushed to production.
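Curious what a validation gate inside a CI/CD script can look like? Below is a rough sketch of the general pattern in plain Python. It deliberately does not use the Deepchecks API. The metric names, thresholds, and example values are all hypothetical placeholders. The idea is simply that the pipeline compares validation results against agreed minimums and signals failure when any of them falls short.

```python
# Hypothetical minimum acceptable values; a real project would choose its own.
THRESHOLDS = {"accuracy": 0.90, "disparate_impact": 0.80}


def failed_checks(metrics, thresholds):
    """Return the names of metrics that are missing or below their minimum."""
    return [
        name
        for name, minimum in thresholds.items()
        if metrics.get(name, float("-inf")) < minimum
    ]


def gate(metrics, thresholds=THRESHOLDS):
    """Print each failure and return a process-style exit code (0 = pass)."""
    failures = failed_checks(metrics, thresholds)
    for name in failures:
        print(f"FAILED: {name} is below its minimum of {thresholds[name]}")
    return 1 if failures else 0


# Placeholder metrics; in practice these would come from your validation step.
example_metrics = {"accuracy": 0.93, "disparate_impact": 0.72}
exit_code = gate(example_metrics)
print(f"exit code: {exit_code}")
```

In a real pipeline, the script would typically end with `sys.exit(exit_code)` so that a non-zero code stops the deployment step.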

🌟 Key lesson: User-friendly solutions are the future. Making complex tools easily integrable ensures wider adoption and trust.

Deepchecks stands as a testament to proactive problem-solving. They spotted a gap, addressed it, and continue to evolve, ensuring ML models worldwide operate as intended. So, the next time you marvel at an ML system’s prowess, know that behind the scenes, companies like Deepchecks are ensuring it remains flawless.

Conclusion: Ethics in AI—growing, living, and transforming

As we navigate this brave new world of AI, it’s evident that ethical AI isn’t a static concept carved in stone but a living, breathing, and ever-evolving field. A paradigm that, much like a sapling, grows more robust with the right care and attention. At its core, integrating ethical AI practices is about identifying and embedding these principles from the get-go. By baking ethics into the very foundation of AI projects, the road ahead becomes smoother, the course clearer, and the outcomes more equitable.

Let’s face it, the surge in ethical AI is in full swing, and it’s a critical call to arms. The staggering shift in executives’ perspective is proof. Back in 2018, fewer than half of them viewed AI ethics as essential. Fast forward to 2021, and this figure catapulted to a whopping 75%. This stat is a reflection of our collective awakening. We stand at an inflection point, where the decisions we make today will echo into the future. The responsibility? It’s colossal, and it rests on all our shoulders.

We’re on a quest to continually demystify this nexus of AI and ethics. Our promise? To keep you updated, inspired, and informed. So, whether you’re an AI aficionado or just dipping your toes, bookmark our blog. Dive into round-ups, explore insights, and join us in championing a world where tech evolves ethically.

In closing, as we hurtle into the future, let’s remember: Building a world powered by AI without an ethical backbone is like constructing skyscrapers on sand. It might stand tall for a while, but when the winds of change blow, it’s bound to crumble. So let’s endeavor for a future where our innovations are as principled as they are pioneering, ensuring a legacy that future generations will look back on with pride.