Audio by Hannah A. using WellSaid Labs

This post is from the WellSaid Research team, exploring breakthroughs and thought leadership within audio foundation model technology.

As digital voices become more integral to our daily interactions, the need for natural-sounding, context-aware text-to-speech (TTS) technology has never been greater. Context itself has become a central topic in AI, as highlighted by advances such as Google’s Gemini 1.5 and Anthropic’s recent product updates, which include 100K-token context windows.

These developments emphasize the importance of context in enabling large language models to gain a more comprehensive understanding of the data they process. In turn, this improves AI performance, especially in voice technologies, where it leads to more intuitive responses and more natural predictions in user interactions.

By joining this broader conversation about context, we aim to connect with our audience and highlight the evolution and potential of voice technologies.

At WellSaid Labs, we’re pioneering an approach that sets us apart from conventional TTS companies. Our flagship TTS model goes beyond simply mimicking human speech: it understands and conveys the nuances of English, taking into account the full context of the speech.

Here’s how we’re doing it!

Bridging the gap between phonemes and meaning

Phonemes, the smallest units of sound that distinguish one word from another, are crucial for accurate speech synthesis. English has around 44 phonemes, with the exact number varying by dialect. On their own, however, phonemes carry no meaning or context.

This traditional focus on phonemes reflects an older methodology in voice synthesis, yet it is still used by many of today’s leading deep learning models. Such approaches, while foundational, overlook voice-over content as a whole, neglecting the broader context that makes voice experiences truly natural and engaging.

Altogether, this points to the need for models that understand phonetic detail while also integrating contextual understanding, bringing speech synthesis to new levels of realism and relatability.

For instance, consider the voiced “th” in “those” and the voiceless “th” in “thread.” They are distinct phonemes (/ð/ and /θ/), yet without context neither sound carries any grammatical, semantic, or emotional value.
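A related problem appears in any front end that converts words to phonemes one word at a time. The minimal Python sketch below assumes a tiny, hypothetical lexicon of ARPAbet-style transcriptions and a hypothetical `to_phonemes` helper; neither comes from a WellSaid Labs system. Because each spelling maps to exactly one phoneme string, the heteronym “read” is transcribed identically in the present and past tense, even though a speaker would pronounce the two differently.

```python
# A toy, context-free grapheme-to-phoneme front end (hypothetical lexicon).
# Each word maps to exactly one ARPAbet-style transcription, so the lookup
# cannot tell present-tense "read" (R IY D) from past-tense "read" (R EH D).
LEXICON: dict[str, str] = {
    "i": "AY",
    "will": "W IH L",
    "read": "R IY D",  # only one entry is possible per spelling
    "it": "IH T",
    "yesterday": "Y EH S T ER D EY",
    "tomorrow": "T AH M AA R OW",
}


def to_phonemes(sentence: str) -> list[str]:
    """Look up each word independently, ignoring its neighbors."""
    words = sentence.lower().replace(".", "").split()
    return [LEXICON[w] for w in words]


present = to_phonemes("I will read it tomorrow.")
past = to_phonemes("I read it yesterday.")

# "read" receives the same transcription in both sentences, although a
# speaker would say R IY D in the first and R EH D in the second.
print(present[2], "|", past[1])  # R IY D | R IY D
```

Any fix has to bring in information from outside the word itself, which is exactly what a context-aware model supplies.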

Our approach: Contextual embeddings

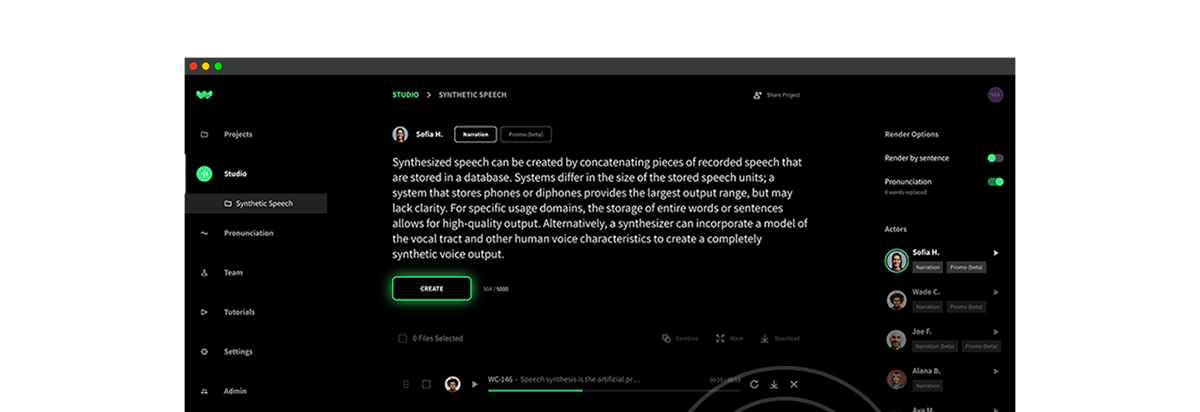

At WellSaid Labs, we transcend the limitations of phonemic preprocessing by training our model on full contexts. Unlike traditional methods that train TTS systems on isolated phonemes, we train our model on entire voice-over scripts, which requires large language models (LLMs).

By running complete scripts through an LLM layer, we obtain a contextual embedding for each word that reflects how it is used in that particular script. WellSaid Labs has developed one of the first models in the industry to model English itself rather than phonetics alone, taking into account the full context of the voice-over script. We are proud of this step toward more natural TTS.
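To illustrate what a contextual embedding is, the sketch below uses a single toy self-attention head with no learned weights as a stand-in for a real LLM layer. The helpers `static_embedding`, `self_attention`, and `contextual_embeddings`, the 8-dimensional vectors, and the hash-seeded lookup table are all hypothetical. The word “bank” starts from the same static vector in both scripts, but once attention mixes in its neighbors, its contextual vector differs between the two.

```python
import hashlib
import math
import random

DIM = 8  # toy embedding width (assumption)


def static_embedding(word: str) -> list[float]:
    """Deterministic, context-free vector for a word (stand-in for a lookup table)."""
    seed = int(hashlib.sha256(word.encode()).hexdigest(), 16) % (2**32)
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(DIM)]


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """One scaled dot-product attention head with identity projections (Q = K = V = input)."""
    scale = math.sqrt(DIM)
    outputs = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in vectors]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]  # softmax over all positions
        # Each output is a weighted average of every vector in the script.
        outputs.append([sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(DIM)])
    return outputs


def contextual_embeddings(script: str) -> list[tuple[str, list[float]]]:
    """Embed every word of a full script so each vector reflects its neighbors."""
    words = script.lower().split()
    mixed = self_attention([static_embedding(w) for w in words])
    return list(zip(words, mixed))


a = dict(contextual_embeddings("we sat on the river bank"))
b = dict(contextual_embeddings("the bank approved the loan"))

print(static_embedding("bank") == static_embedding("bank"))  # True: same static input
print(a["bank"] != b["bank"])  # True: different contextual output
```

A production model stacks many such layers with learned query, key, and value projections; this sketch only shows why the same word can receive different vectors in different scripts.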

Training on English directly

Our models learn English within its full context. By processing entire scripts at once, they gain an understanding of language that is deeply rooted in context. This contextual awareness is what enables WellSaid Labs to generate speech that is not only natural-sounding but also contextually appropriate. Our approach contrasts sharply with conventional models, which may produce confused or neutral outputs when their training data contains several different deliveries of the same word or phrase.
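One way to see why context-free models drift toward neutral delivery: if a model is trained with squared-error loss and cannot tell two deliveries of a phrase apart, the best single prediction it can make is their average. The toy example below assumes hypothetical training data in which “really” ends with rising pitch in questions and falling pitch in statements, summarized as a single number (final pitch change in semitones). Without context, the squared-error optimum is the mean, a flat contour; conditioning on context recovers both deliveries.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical training examples: (context, phrase, final pitch change in semitones).
# The same word is delivered two different ways depending on the surrounding script.
EXAMPLES = [
    ("question", "really", +4.0),
    ("question", "really", +5.0),
    ("statement", "really", -4.0),
    ("statement", "really", -5.0),
]


def fit_without_context(examples):
    """Per phrase, the constant that minimizes squared error is the mean target."""
    by_phrase = defaultdict(list)
    for _, phrase, target in examples:
        by_phrase[phrase].append(target)
    return {phrase: mean(ts) for phrase, ts in by_phrase.items()}


def fit_with_context(examples):
    """Conditioning on context keeps each delivery distinct."""
    by_key = defaultdict(list)
    for context, phrase, target in examples:
        by_key[(context, phrase)].append(target)
    return {key: mean(ts) for key, ts in by_key.items()}


no_ctx = fit_without_context(EXAMPLES)
with_ctx = fit_with_context(EXAMPLES)

print(no_ctx["really"])                   # 0.0: a flat, "neutral" contour
print(with_ctx[("question", "really")])   # 4.5: rising
print(with_ctx[("statement", "really")])  # -4.5: falling
```

Real acoustic models predict far richer targets than one pitch value, but the averaging effect applies to any target the model cannot condition on.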

The role of LLM technology and contextual embeddings

Our training process includes an LLM layer that provides a contextual embedding for each word. These embeddings feed directly into our models, allowing them to learn from phonetic and contextual information together. The result is a TTS model capable of understanding and generating speech that accurately reflects the intended meaning and nuances of the original script.
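The text does not specify how the embeddings are wired into the acoustic model. One common pattern, shown here only as a hypothetical sketch, is to repeat each word’s contextual vector once per phoneme in that word and concatenate it with the phoneme’s own embedding, so the model sees sound and meaning side by side. The function `align_word_features`, the toy phoneme table, and the vector sizes are assumptions for illustration, not WellSaid Labs’ implementation.

```python
Vector = list[float]


def align_word_features(
    word_vectors: list[Vector],
    word_phonemes: list[list[str]],
    phoneme_table: dict[str, Vector],
) -> list[Vector]:
    """Repeat each word's contextual vector for every phoneme in that word and
    concatenate it with the phoneme's own embedding, giving one feature vector
    per phoneme for a downstream acoustic model (hypothetical design)."""
    if len(word_vectors) != len(word_phonemes):
        raise ValueError("need exactly one phoneme list per word")
    features = []
    for context_vec, phonemes in zip(word_vectors, word_phonemes):
        for ph in phonemes:
            features.append(phoneme_table[ph] + context_vec)  # list concatenation
    return features


# Toy inputs for "the thread": 2-d phoneme embeddings, 3-d contextual vectors.
phoneme_table = {p: [float(i), float(-i)] for i, p in enumerate(["DH", "AH", "TH", "R", "EH", "D"])}
word_vectors = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
word_phonemes = [["DH", "AH"], ["TH", "R", "EH", "D"]]

feats = align_word_features(word_vectors, word_phonemes, phoneme_table)
print(len(feats), len(feats[0]))  # 6 5
print(feats[2])  # [2.0, -2.0, 0.4, 0.5, 0.6]
```

Repeating the word vector keeps the phoneme sequence length unchanged, so a phoneme-level model can consume the extra context without any change to its alignment.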

Why context matters

The significance of context in speech is hard to overstate. While the research community widely acknowledges the importance of context for accurate predictions, WellSaid Labs has taken significant strides in maximizing the potential of contextual learning. Because we can train models on long spans of context, they capture and convey the immediate context, which carries the most critical cues for voice.

This emphasis on context aligns with extensive literature on its pivotal role in human learning and interaction. For instance, even a seemingly simple question like “How is Grandma?” reveals the depth of context sensitivity required for authentic, human-like responses. The answer varies dramatically with factors such as time, circumstance, and the relationship between the speakers, which illustrates the nuanced nature of context in human communication.

Moreover, the challenge of context extends beyond human conversation into AI. Despite recent advances, incorporating context remains a significant hurdle. AI systems often struggle to adapt to new, unseen environments or to maintain continuity across multiple interactions, which is crucial for applications ranging from manufacturing to medical practice.

Even advanced models like OpenAI’s GPT-3 and Google’s PaLM, though capable of tracking local context to an extent, still face limitations when dealing with the multifaceted and evolving nature of real-world context. Approaches such as retrieval-based query augmentation and late-binding context are promising steps toward bridging this gap, mainly through more sophisticated handling of context.

Conclusion: Looking ahead

At WellSaid Labs, our commitment to understanding and leveraging the full context of language sets us apart, ensuring that our TTS models deliver unparalleled naturalness and accuracy.

As we continue to innovate and expand our capabilities, we invite you to stay connected. Follow our product updates to discover new capabilities as they arrive, and join us on this exciting journey toward digital voices that truly understand and resonate with their audience. 🗣