At WellSaid Labs, we consider it an honor to help innovative people amplify their creativity with our AI voices. It is exciting to see the ways our technology can be used to convey information, entertain, and enhance experiences. But with that honor comes a responsibility we take very seriously: making sure our voices are used ethically. Here, we explore the importance and challenges of content moderation.

Why do we conduct content moderation?

We created a product to empower creatives and makers, so why would we set boundaries on what they do? The answer is complex. The purpose of content moderation is to protect the listeners, the voice actors, WellSaid employees, our community, and the general public. Additionally, it is vital to adhere to our own company values. Let’s dig in.

Voice Actor Rights

When a voice actor takes on a project, they can read through the material before recording it. If they find the material objectionable, they can decline the project.

When voice actors license their vocal signature to us, they hand us the job of reviewing each piece of content for them. Voice professionals trust us to make sure that AI avatars created with their voice will not be used in harmful ways. Content moderation is our commitment to the voice actor community that we will protect their valuable investment with us.

Anti-Bullying Precautions

It is exceptionally rare, but we have found instances of our AI voices being used to say hurtful things about other people, language that qualifies as cyberbullying. Whether it is a high school student using a free trial of our Studio or someone joking around with a friend, we will not permit our tool to be used to hurt others.

Aligning with Our Values

Two of our primary company values apply to content moderation. The first relates to voice actor rights. We have a commitment to consent, which means we only produce a voice avatar with the explicit permission of the individual whose voice we use to create an AI representation. That means no deepfakes. It also reinforces the protection we offer our voice actors, who have not consented to having their voices produce harmful content.

Another company value is transparency. This means that we clearly communicate our content moderation guidelines to customers in our Terms of Service. Anyone who does business with WellSaid Labs knows up front that we will not tolerate violations of our ethical code.

What does content moderation encompass?

Defining harmful content may seem simple, but it remains an ongoing discussion for us. We have defined parameters that cover a whole range of voice content that we don't want associated with us or with the actors we work with.

These are the kinds of content we moderate:

- Sexually explicit content: We do not produce content that is pornographic in nature.

- Abusive language: This includes language depicting physical violence or threats.

- Extreme obscenity: Our voice actors prefer their avatars not give voice to this language.

- Hate speech: Renderings of racist or other hateful speech are forbidden.

- Unlawful language: Speech that violates federal laws is not allowed in WSL Studio.

- Impersonation: We do not allow language that attempts to impersonate others without consent.

Of course, each of these categories has complex edge cases. Some of our customers are healthcare educators who need to describe anatomical processes. Others are creative writing enthusiasts who may depict sensitive material in a story. We try to take all of these cases into account in our content moderation process.

How does content moderation work?

As our customer base and our daily number of renderings grow exponentially, our content moderation must evolve as well.

How It Was Done in the Past

Until very recently, we relied on a highly manual process of content moderation. Our staff periodically reviewed rendered content that our filters had flagged. Then we had to reach out to the flagged producers or freeze their accounts, one by one. Over time, this method proved less than ideal in two ways.

First, by the time content reached review, it had already been produced. We wanted a way to prevent harmful content from ever being produced. Second, the process was labor intensive and inefficient for our staff.

In our last release, we decided to take a more preventative approach to moderating content.

A Fresh Take on Content Moderation

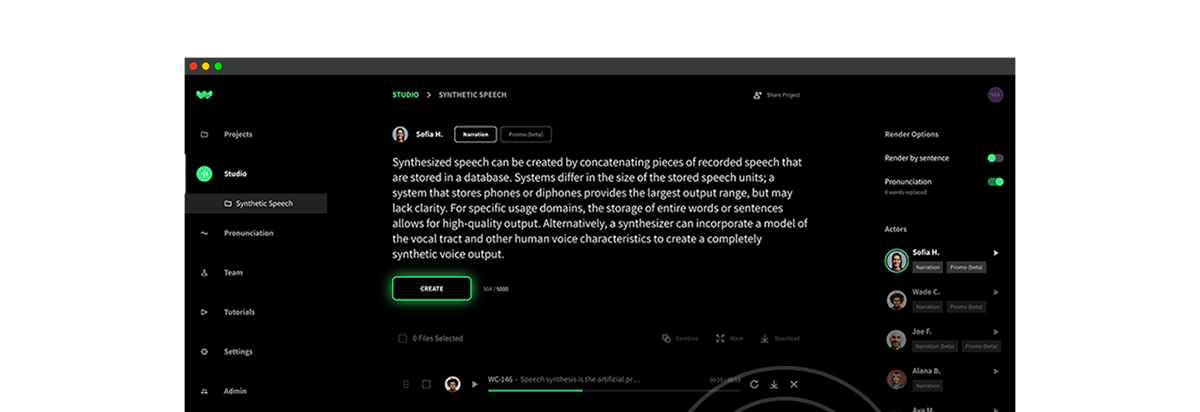

In our most recent software update, we automated content moderation at the moment of rendering. Using sophisticated tools built to detect even subtle variations of the kinds of content we prohibit, we can now block that content before it is ever rendered.

When a user attempts to render text that our moderation software flags, we hold it back from production until we have reviewed it. This safeguard works across all of our voice products: Studio, API, and Custom Voices.
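To make the render-time check concrete, here is a minimal Python sketch of how a gate like this could hold flagged text for a human reviewer instead of rendering it. It is an illustration under stated assumptions, not WellSaid's implementation: `toy_classifier`, its trigger words, `ModerationGate`, and the `synthesize` callback are all hypothetical stand-ins, and a real system would use a trained moderation model rather than a word list.

```python
import re
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    RENDERED = "rendered"
    HELD_FOR_REVIEW = "held_for_review"
    REJECTED = "rejected"


@dataclass(eq=False)  # compare requests by identity, not by field values
class RenderRequest:
    user_id: str
    text: str
    status: Optional[Status] = None
    flags: list[str] = field(default_factory=list)


# Hypothetical stand-in for a real moderation model: a few trigger words per category.
TOY_RULES: dict[str, set[str]] = {
    "abusive_language": {"threaten", "hurt"},
    "impersonation": {"impersonate"},
}


def toy_classifier(text: str) -> list[str]:
    """Return the (hypothetical) categories whose trigger words appear in the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return [category for category, terms in TOY_RULES.items() if words & terms]


class ModerationGate:
    """Checks text before synthesis; flagged text waits in a queue for a human."""

    def __init__(self, classify: Callable[[str], list[str]],
                 synthesize: Callable[[str], bytes]):
        self.classify = classify
        self.synthesize = synthesize  # hypothetical text-to-speech call
        self.review_queue: list[RenderRequest] = []

    def submit(self, req: RenderRequest) -> Optional[bytes]:
        req.flags = self.classify(req.text)
        if req.flags:
            # Nothing is rendered yet: the request is parked and a reviewer is alerted.
            req.status = Status.HELD_FOR_REVIEW
            self.review_queue.append(req)
            print(f"[alert] {req.user_id}: flagged for {req.flags}")
            return None
        req.status = Status.RENDERED
        return self.synthesize(req.text)

    def review(self, req: RenderRequest, approve: bool) -> Optional[bytes]:
        """A human decision: render legitimate edge cases, reject real violations."""
        self.review_queue.remove(req)
        if approve:
            req.status = Status.RENDERED
            return self.synthesize(req.text)
        req.status = Status.REJECTED
        return None
```

A word list like this flags a medical sentence such as "The incision may hurt for a few days." just as readily as a threat, which is exactly why the reviewer step matters: `gate.review(req, approve=True)` lets a legitimate healthcare script through, while `approve=False` keeps a real threat from ever being rendered.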

Still A Human Touch

Language use is an incredibly nuanced field. As we mentioned above, even these rules have exceptions. That is why we keep a human layer in the content moderation process.

Someone on our team receives an alert when submitted content appears to violate our Terms of Service. That person then verifies whether the language actually violates our terms or needs further investigation, all before any harmful content leaves the Studio.

Additionally, we continue to manually spot check borderline content. Our goal is to verify that our automated methods are neither missing harmful language nor unnecessarily flagging valid content. It is a process that continues to evolve.
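The two failure modes named above, missed harmful language and over-flagged valid content, can be counted by comparing the automated decision with a human reviewer's decision on a random sample. The sketch below is a hypothetical illustration, not our actual tooling: `SpotCheck`, `sample_for_review`, and `audit` are names invented for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class SpotCheck:
    text: str
    auto_flagged: bool        # what the automated filter decided
    human_says_harmful: bool  # what the human reviewer decided


def sample_for_review(items: list, k: int, seed: int = 0) -> list:
    """Draw a reproducible random sample of up to k items for manual review."""
    rng = random.Random(seed)
    return rng.sample(items, min(k, len(items)))


def audit(checks: list[SpotCheck]) -> dict[str, int]:
    """Count disagreements between the filter and the human reviewer."""
    missed = sum(1 for c in checks if c.human_says_harmful and not c.auto_flagged)
    over_flagged = sum(1 for c in checks if c.auto_flagged and not c.human_says_harmful)
    return {"missed": missed, "over_flagged": over_flagged, "reviewed": len(checks)}
```

Tracking both counts over time shows whether tuning the filter to catch more harmful language is also causing it to block more legitimate content, such as a healthcare educator's anatomy lesson.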

The Future of Content Moderation at WSL

As with any part of our technology at WellSaid, content moderation will change and improve over time.

For one, we will be adding new languages to our Studio in 2022. The nuances of getting other languages right also extend to content moderation in those languages. We are actively thinking through which tools and resources we will use to ensure that our voice actors and users are protected in these new arenas.

At WellSaid, this is a challenge we are excited and honored to meet. Our goal of creating voices worth listening to and our commitment to “AI for good” motivate us to continually improve content moderation. With the support of our incredible customers, we can increase the potential of AI voice every day.