Audio by Abbi D. using WellSaid

In recent years, the rapid growth of AI has transformed industries and everyday life. From tools that automate once time-consuming tasks to technologies that enhance creativity, AI has proven to be a useful and efficient tool. However, like many powerful innovations, AI can also be put to harmful uses. Among the most troubling is the rise of deepfakes: synthetic media in which a person’s likeness is convincingly manipulated to show them saying or doing something they never said or did, without their consent. While the underlying techniques can be used for entertainment or artistic expression, deepfakes also open the door to unlawful and harmful activities, such as misinformation, fraud, and privacy violations.

What are Deepfakes?

Deepfakes are AI-generated videos, audio clips, or images that hyper-realistically simulate real people’s appearances or voices, without their consent. The term “deepfake” originates from “deep learning” (a branch of AI) and “fake,” as it merges machine learning techniques with fabricated media. By analyzing vast amounts of existing footage, deepfake algorithms can produce realistic simulations of people, seamlessly altering their expressions, movements, or speech.

Some well-known deepfake examples are videos of celebrities and politicians saying things they never said or doing things they never did. For instance, deepfake videos have featured public figures like Barack Obama, Tom Cruise, and Mark Zuckerberg, sometimes for comedic or satirical purposes. When misused, however, deepfakes can spread false information and manipulate public opinion. One example is the deepfake robocall imitating President Biden’s voice that circulated in the days before New Hampshire’s January 2024 primary election.

How are Deepfakes Created?

Most synthetic media is created with deep learning, a family of machine learning techniques built on neural networks. These models require large amounts of training data, such as hours of footage or thousands of images of the target subject. The process involves training a model to recognize patterns in facial movements, expressions, and voice characteristics, and then generating an artificial version that can mimic these traits in new contexts.

Synthetic media becomes a problematic deepfake when it is created without the subject’s explicit permission and used in ways that may be harmful or unlawful.

How are Deepfakes Used?

Deepfake techniques are used across many industries and by many different groups, often for entertainment. For example, filmmakers have used these methods to de-age actors or to recreate deceased actors for new roles.

However, many people exploit the technology for malicious purposes. Cybercriminals can deploy deepfakes to impersonate corporate executives or political leaders, carrying out fraud such as wire transfer scams or influencing public discourse with fake speeches or statements. Deepfakes have also been used in personal attacks, such as creating non-consensual explicit content or harassing individuals by falsely depicting them taking part in illegal or unethical activities.

What are the Dangers of Deepfakes?

Deepfakes pose dangers to individuals, corporations, and society at large. One of the greatest concerns is the erosion of trust. Deepfakes make it harder to distinguish between real and fake media, which breeds skepticism toward authentic information while increasing the risk that people believe false information. This can have profound consequences, especially during election seasons or whenever public opinion is shaped by what people see and hear on social media.

Another major concern is the potential for identity theft and financial fraud. Scammers can use deepfakes to impersonate high-profile individuals, by voice or video, to authorize fraudulent transactions or steal sensitive information. For example, in 2019, scammers used deepfake audio in a phone call to trick a CEO into transferring €220,000; he believed he was following direct orders from his superior.

Deepfakes are also misused for blackmail and harassment. These attacks can take a serious psychological toll on victims, and the damage to their reputations may be irreparable.

Four Ways to Identify Deepfakes

In this age of information overload, it is more important than ever to stay alert and develop strong analytical skills in order to recognize when you may be interacting with a deepfake. Here are four ways to identify when a piece of media may have been created using deepfake technology:

Unnatural Tone or Conversation

One of the most common signs of a deepfake audio clip is an unnatural or flat tone. Human voices are filled with nuanced emotion and natural variation in pitch and rhythm. A deepfake voice may lack the natural rise and fall of human speech, sounding overly robotic and emotionless.

In conversational deepfakes, also pay attention to how the speaker reacts in dialogue. AI models may not fully grasp social cues or timing, leading to delayed responses, awkward pauses, or inappropriate facial reactions to certain statements. These subtle errors can break the illusion and signal that the recording may have been manipulated.

Off-putting Visual Cues

Watch for moments where the person’s speech does not perfectly align with their lip movements. This subtle desynchronization can make it look as though the voice is coming from a separate source, even when the audio itself is convincing.

Additionally, inconsistent facial expressions or awkward body language can be a major red flag. Deepfake algorithms sometimes struggle to replicate natural human microexpressions, such as subtle eye movements, small muscle twitches, or the way skin reacts to light. These imperfections can make the speaker seem slightly off, almost robotic, with unnatural blinking patterns or stiff, exaggerated facial expressions.

Background Noise or Reverberation

Background noise and vocal reverberation are other important cues to listen for when identifying deepfakes. Real-world recordings usually carry environmental sound, such as room acoustics, background chatter, or outdoor noise, which lends the audio a natural sense of depth and space. Deepfakes, however, often struggle to recreate these environmental cues consistently.

For instance, in a deepfake recording of a public figure delivering a speech, the voice might sound too isolated, as if it were recorded in a vacuum, without any interaction with the surrounding environment.

Odd Pronunciation or Awkward Pauses

Another subtle way to identify deepfakes is to listen for odd pronunciations of common words or awkward pauses in conversation. Because voice models learn from large, general datasets, they may not capture an individual speaker’s unique pronunciation habits. The result can be slight mispronunciations or over-articulated words that feel unnatural in context.

Similarly, deepfakes can struggle with conversational timing, leading to awkward pauses or unnatural gaps between words. Humans tend to adjust their pacing and pauses dynamically during speech, especially in dialogue, whereas deepfake-generated voices might include pauses that seem out of place or poorly timed.

Safeguarding Yourself Against Deepfakes

As deepfakes become more prevalent, it’s essential to take steps to protect yourself from being deceived or manipulated by them. Here are some tips to safeguard yourself:

- Media Literacy and Skepticism: Always approach content with a critical eye. If something feels off about a video or audio clip, it’s worth investigating further. Seek out multiple sources to verify the authenticity of the content before accepting it as true.

- Two-Factor Verification: For important communications, such as requests for money or sensitive information, confirm the request through a second, independent channel. For example, call the person back at a number you already know is theirs rather than one supplied in the message. A live phone call or video chat can also provide some assurance, since convincing real-time deepfakes are harder to produce, though this check is not foolproof.

- Raise Awareness: Share your knowledge about deepfakes with others to help them recognize potential threats. Education is key in preventing people from falling victim to scams or misinformation fueled by deepfakes.

The Future of Deepfakes and Legislative Restrictions

As deepfake technology continues to advance, it is likely to become even more difficult to distinguish between real and fake media. The rise of hyper-realistic deepfakes could pose significant threats to personal privacy and national security. Governments and organizations are already beginning to recognize the dangers and are working toward implementing legislative restrictions to curb the misuse of deepfakes.

Some countries have introduced laws that penalize the creation or distribution of deepfakes with malicious intent. Additionally, social media platforms are developing policies to remove deepfake content that can cause harm or spread misinformation.

In the future, legislation will likely continue to evolve to address the growing threats posed by deepfakes, with increased regulation and accountability for those who produce or distribute harmful content.

Recently, California enacted several laws addressing AI-generated media. These include the California AI Transparency Act, which requires AI-generated content to be labeled, and two bills that protect actors and performers, including deceased ones, who want to safeguard their digital likenesses in audio and visual productions.

The state also passed three bills to combat deceptive election content created with deepfakes, which could spread harmful misinformation and unfairly influence elections. These laws are a step toward ensuring the responsible use of AI and other digital media technologies.

When it comes to actors and performers, these laws include:

- AB 2602 requires contracts to specify how AI-generated digital replicas of a performer’s voice or likeness will be used, and requires that the performer be professionally represented when negotiating the contract.

- AB 1836 prohibits commercial use of digital replicas of deceased performers in films, TV shows, video games, audiobooks, sound recordings and more, without first obtaining the consent of those performers’ estates.

When it comes to election-related content:

- AB 2655 requires large online platforms to remove or label deceptive and digitally altered or created content related to elections during specified periods, and requires them to provide mechanisms to report such content.

- AB 2839 expands the timeframe in which a committee or other entity is prohibited from knowingly distributing an advertisement or other election material containing deceptive AI-generated or manipulated content.

- AB 2355 requires that electoral advertisements using AI-generated or substantially altered content feature a disclosure that the material has been altered.

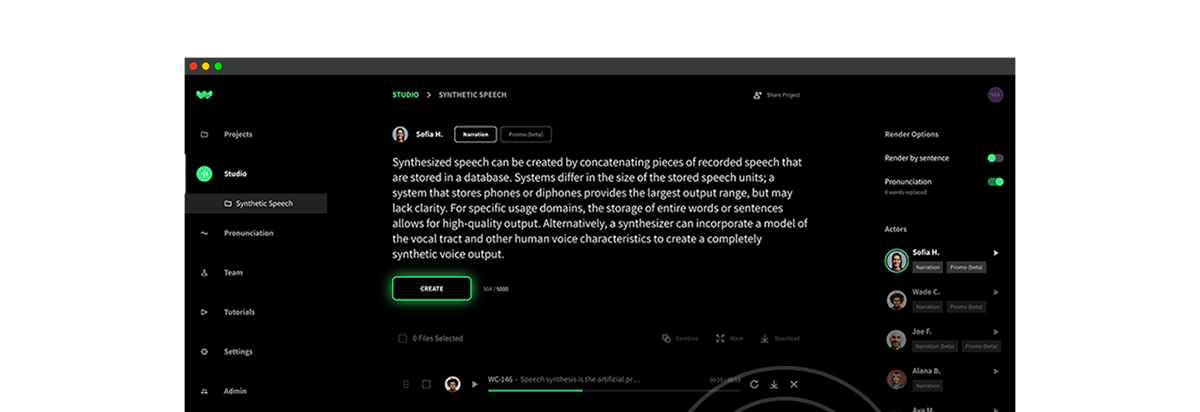

WellSaid and Deepfakes

WellSaid stands firmly against the creation and use of deepfakes in any form. We will never clone a person’s voice without their explicit consent, and our voices are created with the written consent of voice actors whom we properly compensate for their time and talent.

We also operate on a closed-data system that keeps customer data secure and protected from inappropriate use; it is never shared externally or used to train our model. In addition, we moderate content to reduce the risk of our voices being used in unlawful or harmful ways.

Want to test WellSaid’s ethically sourced AI Voice platform?

Putting It All Together

While deepfake technology has transformative potential for entertainment and creative expression, its misuse presents serious threats to privacy, security, and public trust. The ability to convincingly manipulate audio and video can fuel misinformation, fraud, and personal attacks, so it is critical for individuals to understand the dangers. By staying vigilant and keeping up with current deepfake trends, you can better protect yourself from these risks. Ongoing legislative efforts are also an important step toward mitigating the impact of deepfakes and holding generative AI companies accountable for ethical practices.